Next-Generation Patient Simulators

In the United States, preventable medical errors, the majority of them communication-related, cause over 400,000 deaths and billions of dollars in losses each year. In this project, we hope to reduce the incidence of these errors by creating high-fidelity robotic human patient simulators (HPS) that can exhibit realistic, clinically relevant facial expressions, which are critical cues providers need to assess and treat patients.

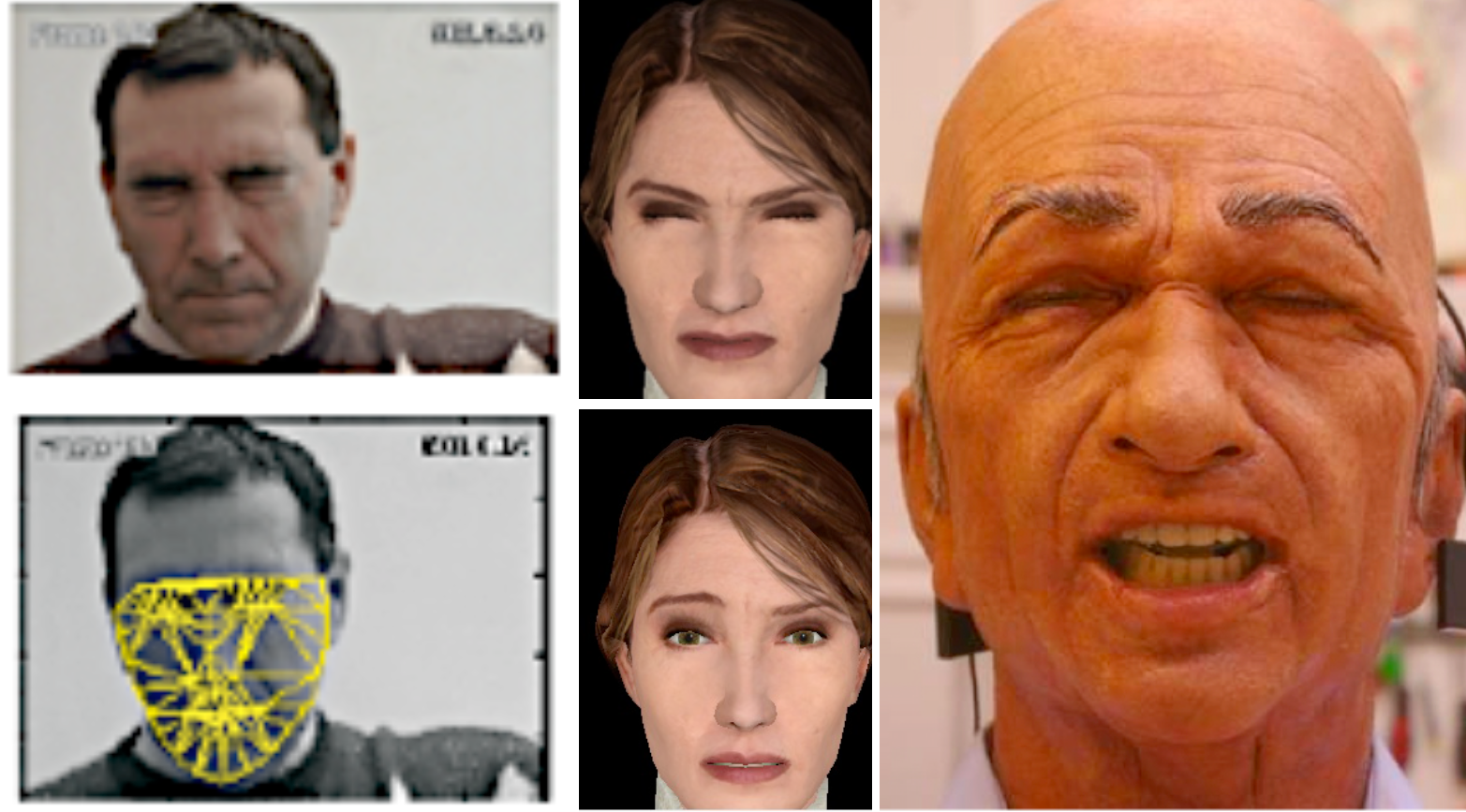

Although HPS systems are the most commonly used android robots in America, they currently do not exhibit realistic facial expressions, gaze, or mouth movements. Our goal in this project is to address this shortcoming by developing novel expression synthesis algorithms that model the facial characteristics of people who have had strokes or who have cerebral palsy or dystonia, as well as the states of pain and drowsiness. When implemented on a novel HPS system, these algorithms will yield expressive robots that enable educators to run simulations currently impossible with commercially available technology, leading to more realistic training experiences for doctors, nurses, and combat medics.

In addition to improving quality and safety in healthcare, this work could enhance the understanding of these disorders and provide a means of educating the public on how to interact better with people who have these conditions and how to quickly recognize signs of stroke.

Our previous work in this area implemented a novel performance-driven pain synthesis method on virtual avatars, which we used first to determine how well people can identify pain from naturalistic facial expressions. We are currently developing our android robotic head.

Selected Publications:

Moosaei, M., Hayes, C.J., and Riek, L.D. (2015) "Performing Facial Expression Synthesis on Robot Faces: A Real-time Software System". In Proceedings of the 4th International AISB Symposium on New Frontiers in Human-Robot Interaction (NF-HRI).

Moosaei, M., Hayes, C.J., and Riek, L.D. (2015) "Facial Expression Synthesis on Robots: An ROS Module". In Proceedings of the 10th ACM/IEEE Conference on Human-Robot Interaction (HRI). [PDF]

Moosaei, M., Gonzales, M.J., and Riek, L.D. (2014) "Naturalistic Pain Synthesis for Virtual Patients". In Proc. of the 14th International Conference on Intelligent Virtual Agents (IVA). [Acceptance Rate: 17%].

Gonzales, M.J., Moosaei, M., and Riek, L.D. (2013). "A Novel Method for Synthesizing Naturalistic Pain on Virtual Patients". Simulation in Healthcare, Vol. 8, Issue 6. [pdf]. [Best overall paper and Best student paper at IMSH 2014].

Janiw, A.E., Woodrick, L.S., and Riek, L.D. (2013). "Patient situational awareness support appears to fall with advancing levels of nursing student education". Simulation in Healthcare, Vol. 8, Issue 6. [pdf]

Huus, A. and Riek, L.D. (2012) "Assessing High-Fidelity Mannequin Facial Expressivity: A Preliminary Gap Analysis." Simulation in Healthcare. Vol. 7, No. 6., p. 541.

Martin, T.J., Rzepczynski, A.P., and Riek, L.D. (2012) "Ask, Inform, or Act: Communication With a Robotic Patient Before Haptic Action". In Proceedings of the 7th ACM International Conference on Human-Robot Interaction (HRI). [pdf]