
Launched in 1948 by

Allen Funt, Candid Camera brought smiles to millions of people around

the world. During the show hidden cameras caught ordinary people

being themselves. There was no pretense; their reactions to the sometimes

bizarre situations set up by the Candid Camera staff were genuine

and funny. Today, using cameras to capture the actions and features

of people in a variety of settings is no laughing matter.

In fact,

the emphasis on human recognition and identification, specifically

recognizing terrorists and other security-related issues, has grown

exponentially since 9/11. Authorities around the world are anxious

to quickly and accurately confirm an individual’s identity

or assess suspicious behaviors with or without that individual’s

consent or, in some cases, their knowledge. Biometrics is the tool

they are using.

A biometric

measurement can be taken of any part of the body. Ideally, the measurement

should be constant so that it doesn’t change

over time or with a person’s mood. It should be distinctive so

that no two people could exhibit the same features. As important, it

should be something that can be easily and quickly measured, cataloged,

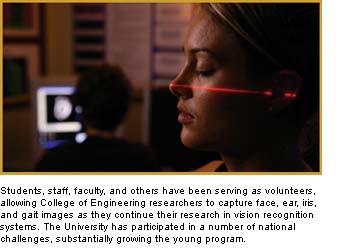

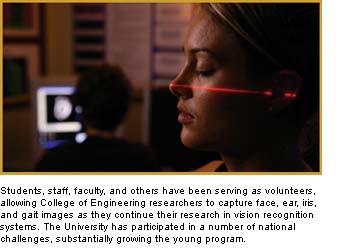

and referenced. A young and vibrant endeavor, the Notre Dame biometrics

program is contributing substantially to biometrics research and recognition

technologies:

Fingerprints are perhaps the best known form of biometrics.

Researchers in the College of Engineering are making breakthroughs

in face, ear, gait, and iris recognition technologies. Kevin

W. Bowyer, the Schubmehl-Prein Chair of the Department

of Computer Science and Engineering, and Patrick

J. Flynn, professor of computer science and

engineering, direct the college’s biometrics efforts. “Although

we started later than computer vision groups at other universities,” says

Flynn, “we have made significant advances in this area in a

relatively short time.” Both professors stress that the program’s

success is due largely to the work of undergraduates, graduate students,

and postdoctoral scholars. “Our projects have been national

in scope with the potential to have very high impact,” he says. “We

were able to identify niches and develop sufficient data to make

credible statistical inferences in many areas.”

Face Recognition

In spite of warnings to not judge a book by its cover, people around

the world have been judging faces since time began. Look at the

portraits in any museum. The faces reflect beauty, youth, wisdom,

age, innocence, and evil as seen through the artist’s eyes.

Today, instead of trusting what can be seen, facial recognition

systems are helping security forces distinguish the innocent from

the evil beyond what is readily visible.

As optimistic as that sounds,

there are considerable challenges current facial recognition systems

must overcome. First and foremost is the myth that face recognition

technologies are disguise proof. While there are technologies,

such as two- and three-dimensional scans and infrared and visible

light imaging, being studied in the Department of Computer Science

and Engineering that will help identify if a person is wearing

additional makeup or a prosthesis, they are not infallible. Proper

lighting is also a factor in face recognition systems, as are the

angle of the shot and distance of the subject from the camera.

Using

two- and three-dimensional images, Notre Dame researchers map the

topography (shape and depth) of a face. The outer corner of the

eye, the tip of the nose, and the center of the chin become landmark

points as a series of photos of an individual are taken: looking

directly at the camera with a normal expression, smiling, and with

spotlights.

In fact, several sets of images of the same individual

have been taken with at least six and as many as 14 weeks between

capture sessions. Infrared images have also been recorded and added

to a gallery of images. The Notre Dame collection is one of the

largest databases of faces in the world, featuring images captured

repeatedly from students, staff, and faculty throughout the University

over a four-year period.

More than 75,000 images from the Notre

Dame collection are being used as part of the 2006 Face Recognition

Grand Challenge (FRGC), which is sponsored by the National Institute

of Standards and Technology, Department of Homeland Security Science

and Technology Directorate, the Federal Bureau of Investigation

(FBI), the Intelligence Technology Innovation Center, and the Technical

Support Working Group. Participating researchers are provided with

the images and a six-experiment challenge which incorporates three-dimensional

scans, high-resolution still images, multiple still images taken

under a variety of conditions, multi-modal face recognition, multiple

algorithms, and preprocessing algorithms. The goal of the FRGC is

to reduce the error rates in current systems so that they may be

deployed for real-world applications.

Ear Recognition

Ears are like fingerprints in that they are unique to each individual

and, without surgical intervention, do not change shape throughout

a person’s life. For this reason, ears have been used as

biometric tools but much less frequently than faces or fingerprints.

In fact, the United States Citizenship and Immigration Services,

formerly the Immigration and Naturalization Service, used to require

that every applicant provide two identical photos showing the entire

face, including the right ear and left eye, for use in visas or

passports. This policy was changed in August 2004 to comply with

the Border Security Act of 2003. All new photos must now exhibit

a full-frontal face position in color.

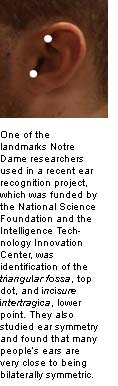

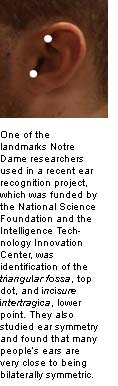

The change in photographic

requirements, however, does not negate the usefulness of the ear

as a tool in biometric recognition. In a recent Notre Dame project,

which was funded by the National Science Foundation and the Intelligence

Technology Innovation Center, Bowyer and Ping

Yan, a graduate student

in the Department of Computer Science and Engineering, captured

multiple images of ears from more than 400 individuals. In one of

the largest experimental investigations of ear biometrics ever

conducted, Bowyer and Yan applied four different approaches to

test the accuracy of their methods.

Among other variables, they

studied three different landmark selection methods. A landmark

on an ear, similar to one on the ground, provides a constant point

of reference and measurement. The landmarks they used each featured

two points: the first measured the distance between the triangular

fossa and antitragus, the second between the triangular

fossa and

incisure intertragica, and the third measured the distance between

two lines ... one along the border between the ear and the face

and the other from the top of the ear to the bottom, reflecting

the size of the ear.
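The two-point landmark measurements described above come down to a distance between two labeled positions on the ear image. The following is a minimal sketch of that arithmetic, not the researchers' software; the function name `landmark_distance` and the pixel coordinates are made up for illustration.

```python
import math

def landmark_distance(landmarks, first, second):
    """Euclidean distance between two named landmark points, in pixels."""
    return math.dist(landmarks[first], landmarks[second])

# Hypothetical (x, y) pixel positions marked on one ear image.
ear = {
    "triangular_fossa": (120.0, 80.0),
    "antitragus": (150.0, 120.0),
    "incisure_intertragica": (135.0, 116.0),
}

fossa_to_antitragus = landmark_distance(ear, "triangular_fossa", "antitragus")
fossa_to_incisure = landmark_distance(ear, "triangular_fossa", "incisure_intertragica")
print(fossa_to_antitragus, fossa_to_incisure)
```

A measurement like this gives every ear image a common reference length, which is one plausible reason to fix landmarks before comparing images.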

They then applied Principal Component Analysis,

also called “eigenear,” which

is widely used in face recognition, to test for intensity, depth,

and edge matching. Bowyer and Yan found that ears are geometrically

complex and require a complex set of algorithms to assess the collected

data.
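To make the idea behind Principal Component Analysis concrete, here is a deliberately small sketch under several assumptions: each ear image is reduced to a short list of made-up numbers, only the single strongest component is kept, and a probe is matched to the nearest gallery entry along that component. The names `leading_component` and `identify` are illustrative and are not taken from the Notre Dame work; a real system would use full images, many components, and a numerical library.

```python
import math

def mean_vector(rows):
    """Column-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]

def center(row, mean):
    return [x - m for x, m in zip(row, mean)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def leading_component(centered, iterations=100):
    """Approximate the top principal component by power iteration."""
    dim = len(centered[0])
    v = [1.0 / math.sqrt(dim)] * dim  # arbitrary unit-length starting guess
    for _ in range(iterations):
        # Apply X^T X to v without building the covariance matrix.
        weights = [dot(row, v) for row in centered]
        w = [sum(wt * row[j] for wt, row in zip(weights, centered))
             for j in range(dim)]
        norm = math.sqrt(dot(w, w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    return v

def identify(gallery, probe):
    """Return the gallery name whose projection lies closest to the probe's."""
    names = list(gallery)
    mean = mean_vector([gallery[n] for n in names])
    component = leading_component([center(gallery[n], mean) for n in names])
    coords = {n: dot(center(gallery[n], mean), component) for n in names}
    probe_coord = dot(center(probe, mean), component)
    return min(names, key=lambda n: abs(coords[n] - probe_coord))

# Made-up four-value "images"; real inputs would be thousands of pixels.
gallery = {
    "ear_a": [10, 12, 11, 13],
    "ear_b": [40, 42, 41, 43],
    "ear_c": [80, 79, 82, 81],
}
probe = [41, 41, 42, 44]  # a second, slightly different capture of ear_b
print(identify(gallery, probe))
```

Projecting onto a few strong components is what lets this kind of matching compare images by their main patterns of variation rather than pixel by pixel.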

Although the experiment was conducted under controlled circumstances,

it appears that ear recognition based on a three-dimensional approach

is more than 90 percent accurate. However, the results also suggest

that while there is no significant difference between recognition performance

using the ear versus the face, using both the ear and the face in a

multi-modal system results in a statistically significant improvement

in recognition.

Wise as an owl,

blind as a bat, clever as a fox, boarish, catlike, having horse

sense ... people are often described in relation to animals.

It’s not surprising that researchers might apply similar

terms when attempting to categorize specific groups. In fact,

in 1998 George R. Doddington, who was at that time the senior

principal scientist with SRI International and visiting scientist

at the National Institute of Standards and Technology, co-authored

a paper on performance variability in speaker recognition systems

in which he suggested that all people could be classified into

one of four groups in regards to their speech patterns and how

well they could be identified by such systems.

Doddington’s

menagerie was based on sheep, goats, lambs, and wolves. Sheep

represented the majority of the population. Readily distinguishable

one from another, they were easy for recognition systems to identify

and model. Goats were speakers who were more difficult for a

system to recognize. A goat, for example, might not provide a

match to itself from one day to the next. Lambs adversely affected

the performance of a speaker recognition system, because they

were so similar one to another and were very easy to imitate.

Wolves, according to the Doddington scale, also negatively affected

recognition systems, because they were great imitators.
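One way to picture the four categories is as labels derived from match scores. The sketch below is an illustration only, not the procedure from the 1998 paper: the function `classify_zoo`, the fixed 0.5 thresholds, and every score are assumptions chosen to make the categories visible.

```python
from statistics import mean

def classify_zoo(genuine, impostor, low_genuine=0.5, high_impostor=0.5):
    """Label each subject from average match scores (higher means more similar).

    genuine:  {subject: [scores against the subject's own samples]}
    impostor: {(claimed, actual): [scores when `actual` poses as `claimed`]}
    """
    labels = {}
    for subject, own_scores in genuine.items():
        as_target = [s for (claimed, _), v in impostor.items()
                     if claimed == subject for s in v]
        as_attacker = [s for (_, actual), v in impostor.items()
                       if actual == subject for s in v]
        tags = set()
        if mean(own_scores) < low_genuine:
            tags.add("goat")   # hard to match even to itself
        if as_target and mean(as_target) > high_impostor:
            tags.add("lamb")   # easy for others to imitate
        if as_attacker and mean(as_attacker) > high_impostor:
            tags.add("wolf")   # good at imitating others
        labels[subject] = tags or {"sheep"}
    return labels

# Made-up scores for four hypothetical subjects.
genuine = {"ann": [0.9, 0.8], "bob": [0.3, 0.4], "cy": [0.9, 0.85], "dee": [0.95, 0.9]}
impostor = {
    ("ann", "bob"): [0.1], ("ann", "cy"): [0.2], ("ann", "dee"): [0.8],
    ("bob", "ann"): [0.1], ("bob", "cy"): [0.2], ("bob", "dee"): [0.7],
    ("cy", "ann"): [0.7], ("cy", "bob"): [0.8], ("cy", "dee"): [0.9],
    ("dee", "ann"): [0.1], ("dee", "bob"): [0.1], ("dee", "cy"): [0.2],
}
zoo = classify_zoo(genuine, impostor)
print(zoo)
```

Counting how many subjects land under each label is one simple way to estimate what share of a population falls into each category.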

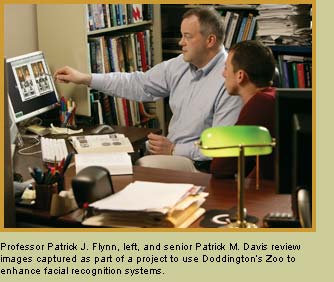

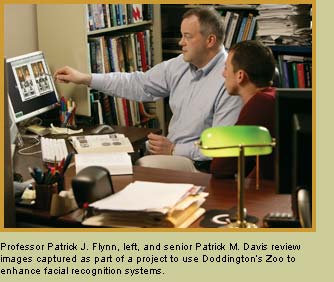

Notre Dame

researchers are extending Doddington’s concept

to facial recognition systems. “It is one of the most interesting

new projects in our lab [the Computer Vision Laboratory],” says

Patrick J. Flynn, professor of computer science and engineering. “Not

only are we examining Doddington’s Zoo in the context of

facial biometrics, but students are playing a key role in the process.”

Flynn

and undergraduates Michael G. Wittman and Patrick

M. Davis are

hoping to determine if the zoo exists and to identify what percentage

of the population can be found in each of the categories. Perhaps

more interesting to the team is the possibility of applying the

zoo concept to other biometric systems, such as iris recognition

or three-dimensional face shape.

In addition to his work on this

and other vision recognition projects, Wittman, a senior in the

Department of Computer Science and Engineering, interned with the

Intelligence and Information Systems division of Raytheon in Falls

Church, Virginia, during summer 2005. He will begin working as

a full-time Raytheon employee, a software engineer, in the Internal

Biometrics research and development program later this summer.


Gait Recognition

What do the story of the prodigal son and Ashley Wilkes’ homecoming

in Gone with the Wind have in common? Both the young man’s father

and Melanie Wilkes identified a loved one from a distance. Their recognition

was not solely for dramatic effect; the manner in which an individual

walks can be an identifying feature. The most recent evidence of this

was described in an article titled “Visual Analysis of Gait as

a Cue to Identity,” which was published in the December 1999

issue of Applied Cognitive Psychology. The article indicated something

known to anyone waiting and watching for a loved one’s return:

humans can, with very brief exposure and under a variety of lighting

conditions, identify other individuals by their gait.

Extensive research

has been done to address how accurately a subject could be identified

by the characteristics of his or her walk: How even are the strides?

Is there a noticeable limp? Studies have also been conducted to determine

how variables, such as terrain or load, might affect the way a person

walks.

Little research has been done from the opposite perspective.

For example, an important question in today’s climate might be, “Can

an analysis of gait determine if a subject is carrying a concealed

load, such as a bomb?” This is the question James

M. Ward, a

2004 graduate of the Department of Computer Science and Engineering

now working at GE Aircraft; Michael G. Wittman, a senior in the department;

and Flynn attempted to answer in their project, “Visual Analysis

of the Effects of Load Carriage on Gait.”

In order to conduct

the project in a controlled environment, the Notre Dame team collected

all of the images on campus, at the Stepan Center and at the Rolfs

Sports Recreation Center, using an area that was 2 ft. wide by almost

40 ft. long. Team members selected subjects who were 18 to 22 years

old, between 5’6” and 6’3” tall,

and between 140 and 200 lbs. Each participant wore black clothing on

which video markers were placed, and each took a practice walk down

the project path before filming began.

As they were taped the first

time down the runway, participants simply walked the length of the

path. After each participant donned a 31-lb. vest, a second trip was recorded. Each

subject was then fitted with a 42-lb. vest and filmed for a third time.

After the video clips were converted, software was used to develop x and y coordinates

of all markers, and individual frames were analyzed to determine if

any trends could be found. According to the data, the upper body’s

joint trajectories vary greatly from person to person, but the lower

body acts in a much more predictable way, compensating for the extra

weight. Flynn stresses that while additional data and analysis are needed

to confirm these trends, gait is definitely something that deserves

continued exploration.
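The kind of trend analysis described above can be sketched with marker coordinates. This is a hypothetical illustration, not the team's software: the measure (change in a marker's vertical range between the unloaded and loaded walks), the function names, and all coordinates are assumptions. A large spread across subjects suggests a marker responds to load differently from person to person; a small spread suggests a more predictable response.

```python
from statistics import pstdev

def vertical_range(track):
    """Vertical excursion of one marker; track is a list of (x, y) frames."""
    ys = [y for _, y in track]
    return max(ys) - min(ys)

def load_response_spread(subjects, marker):
    """Spread across subjects of the change in vertical range under load."""
    changes = [
        vertical_range(walks["loaded"][marker])
        - vertical_range(walks["unloaded"][marker])
        for walks in subjects.values()
    ]
    return pstdev(changes)

# Made-up coordinates for two hypothetical subjects; not data from the study.
subjects = {
    "s1": {
        "unloaded": {"shoulder": [(0, 140), (1, 144), (2, 140)],
                     "knee": [(0, 50), (1, 56), (2, 50)]},
        "loaded": {"shoulder": [(0, 140), (1, 141), (2, 140)],
                   "knee": [(0, 50), (1, 54), (2, 50)]},
    },
    "s2": {
        "unloaded": {"shoulder": [(0, 150), (1, 153), (2, 150)],
                     "knee": [(0, 52), (1, 58), (2, 52)]},
        "loaded": {"shoulder": [(0, 150), (1, 158), (2, 150)],
                   "knee": [(0, 52), (1, 56), (2, 52)]},
    },
}
shoulder_spread = load_response_spread(subjects, "shoulder")
knee_spread = load_response_spread(subjects, "knee")
print(shoulder_spread, knee_spread)
```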

Iris Recognition

Although some of the biometric techniques depicted by Hollywood are

more science fiction than fact, iris recognition is one of the

most accurate forms of identification known to man. The probability

of the irises from two individuals being identical is estimated

at 1 in 10^72. Even identical twins have unique irises.

Iris recognition

technology differs from retinal pattern technology. A colorful

organ that surrounds the pupil, the iris acts like a shutter regulating

the amount of light that the eye receives. It features fibers,

furrows, freckles, and other patterns useful in a biometrics context.

An iris is externally visible; a retina is not. Located in the

back of the eye behind the cornea, lens, iris, and pupil, the retina

is connected to the optic nerve. It helps process images. The retina

also provides accurate biometric information, because of the unique

vessel patterns located in it. Like the iris, retinal patterns

are also thought to remain constant throughout a person’s

life.

Benefits of iris recognition include the fact that it is a non-invasive

form of technology. High-resolution cameras quickly capture, store,

and analyze images without touching a person or damaging the eye.

The challenge faced by iris recognition systems is rooted in the

initial image capture. Currently, researchers acquire images from

a stationary subject positioned within inches of a camera. In controlled

facilities, such as a corporate laboratory or military base, the

subject might sit or stand six to 12 inches away from a camera’s

lens. Many controlled facilities also ask subjects to remove their

glasses, although it is not clear how an image is affected if a subject

is wearing glasses or contacts.

The cameras in automated teller machines,

which can also capture iris images, operate best when a subject is

17 to 19 inches away from the lens. Most important in either application,

the subject must hold completely still, remaining within camera range.

There must also be proper lighting.

While these types of systems might

work well in a corporate laboratory or on a military base, where

individuals expect to be scanned for identification purposes, they

are not yet practical for public places, where people are moving

around and lighting may not be consistent from one side of a room

to another. These are some of the factors being addressed by the

Iris Challenge Evaluation (ICE), an independent evaluation of iris

recognition technology being conducted by the National Institute

of Standards and Technology.

Researchers at Notre Dame, in conjunction

with ICE sponsors (the FBI, Intelligence Technology Innovation Center,

National Institute of Justice, Technical Support Working Group, and

the Transportation Security Administration of the U.S. Department

of Homeland Security), have provided image data sets and software

that ICE participants (academia, industry, and research institutes)

will use as they assess and measure current iris recognition efforts.

The goal of the challenge is to advance iris recognition technology

so that useful images may be acquired at greater distances, from

a variety of angles, under limited lighting conditions, and with

or without the subject’s

knowledge.

Multi-modal Biometrics

Although the adage “two heads are better than one” was

written long before the advent of biometrics, Bowyer and Flynn contend

it applies quite well. In fact, they suggest that the future of biometrics

lies in the use of multi-modal systems. For example, iris recognition

alone cannot be applied to everyone. One in 17,000 people in the

U.S. has some type of albinism, which affects the pigment in their

irises. Acquiring an accurate image of an albino’s iris is

difficult. Involuntary eye movement can also confuse iris recognition

systems. Speaker recognition systems will never work on a mute person.

Gait technologies may trigger a false positive for someone who has

back problems or has had hip replacement surgery or polio. Because

of the involuntary motion associated with Parkinson’s disease,

the faces of people afflicted with that disease are difficult to

capture. Another segment of the population wears veils for religious

reasons, which means that facial recognition systems will not work

on them either.

In these cases, should authorities violate civil liberties

with additional and more invasive searches? Or can using a series

of biometric measurements, a multi-modal approach, help identify

potential threats while maintaining personal freedoms? The benefit

of multi-modal techniques, according to Bowyer and Flynn, is that

they offer a robustness of data which provides more accurate assessments

while maintaining most of those freedoms. But there is no simple

answer. Still in its infancy, biometrics will continue to evolve

to meet the challenges of identification, verification, and security

that are prevalent in today’s world. It will continue

to help nations assess what cannot always be seen with the naked eye.
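A common way to combine biometric measurements is to fuse the scores that separate matchers produce. The sketch below assumes two matchers (face and ear) that score the same candidates on different scales; it rescales each set to the range 0 to 1 and takes a weighted sum. The function names, the equal weighting, and the scores are illustrative assumptions, not the method Bowyer and Flynn used.

```python
def min_max(scores):
    """Rescale a {candidate: score} mapping to the range 0..1."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {name: 0.0 for name in scores}
    return {name: (value - lo) / (hi - lo) for name, value in scores.items()}

def fuse(face_scores, ear_scores, face_weight=0.5):
    """Weighted sum of normalized scores; both inputs must share candidates."""
    face, ear = min_max(face_scores), min_max(ear_scores)
    return {name: face_weight * face[name] + (1 - face_weight) * ear[name]
            for name in face}

# Made-up similarity scores for three hypothetical candidates.
face_scores = {"p1": 0.62, "p2": 0.60, "p3": 0.20}  # nearly a tie on face alone
ear_scores = {"p1": 12.0, "p2": 30.0, "p3": 10.0}   # ear clearly favors p2

fused = fuse(face_scores, ear_scores)
best_face_only = max(face_scores, key=face_scores.get)
best_fused = max(fused, key=fused.get)
print(best_face_only, best_fused)
```

In this toy case the face matcher alone barely prefers one candidate, and the ear scores settle the question. That is the kind of robustness a multi-modal system is meant to provide.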

For more information about computer recognition technology at Notre

Dame, visit http://www.cse.nd.edu/~cvrl.

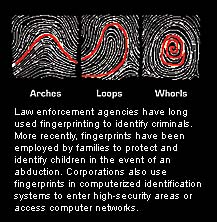

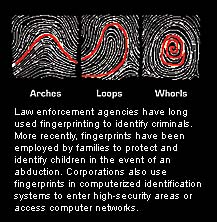

In

the field of biometrics, fingerprinting is the oldest and, to

date, the most successfully applied technique. The use of fingerprints

as a means of identification, such as a legal and binding signature

on official documents, is recorded as early as 1000 B.C. In 1686

Marcello Malpighi, a professor of anatomy at the University of

Bologna, was the first to document and type fingerprints. But

it wasn’t until 1880 that Dr. Henry Faulds suggested that

fingerprints could be used as a means of personal identification.

In an article published in “Nature,” he outlined

a distinct classification system and described how best to capture

and record prints.

Building upon Faulds’ work, Sir Francis

Galton became the first to prove that a person’s fingerprints

remain the same throughout his life and that no two individuals

have the same prints. In fact, he calculated that the odds of

finding identical prints were approximately 1 in 64 billion.

In 1892 Galton published Fingerprints, a book detailing the types

(arch, loop, and whorl) and characteristics (minutia) of fingerprints,

which are still used today.

Fingerprints, however, were not widely

used to identify criminals until 1901, when Sir Edward Richard

Henry began training Scotland Yard investigators in the Henry

Fingerprint Classification System, a uniform system of identification

that featured 1,024 classifications.

Another milestone in law

enforcement technology occurred in 1902, when Alphonse Bertillon,

director of the Bureau of Identification of the Paris Police,

made the first identification of a criminal by matching an unknown

print found at a crime scene with a print already on file. By

1903 fingerprinting technology was being used in the United States,

and a few years later a central storage location for North American

fingerprints was established in Ottawa, Canada. At its opening

it held 2,000 sets of prints.

Congress established the Identification

Division of the Federal Bureau of Investigation (FBI) in 1924,

the basis of the bureau’s

fingerprint repository, and by 1946 the division had processed

100 million fingerprint cards. By 1971 that number had doubled.

A

computerized system of storing and cross-referencing criminal

prints, the Automated Fingerprint Identification System, was established

in the 1990s, enabling law enforcement officers to search millions

of fingerprint files in a matter of minutes. By 1999 the FBI had

phased out the use of fingerprint cards. Although the cards are

still kept on file, the computerized fingerprint records for more

than 33 million criminals can be accessed in a matter of seconds.

In

addition to applications in law enforcement, fingerprinting technologies

are being used in child identification kits for parents. They are

also employed by companies in a variety of different applications

and markets as biometric sensors. For example, companies are spending

millions of dollars to erect firewalls and install intruder detection

systems on their computer networks, but many are also replacing

passwords with fingerprint sensors. These sensors can control laptops,

computer mice, portable hard drives, and are even being used to

personalize wireless devices. In some cell phone systems different

fingers operate different speed dials or open separate buddy lists.

Fingerprint biometrics can also control door locks and smart card

readers.

Tried and true, fingerprinting is not the ultimate biometric. Nor

can it be used to accurately identify everyone. The fingers of

bricklayers, rock climbing enthusiasts, senior citizens, and toddlers

often lack the ridges needed to produce a defined print. Other

errors, technological or human, can also plague the identification

process. For example, in May 2004 the FBI arrested Brandon Mayfield

as a material witness in the March 2004 commuter train attacks

in Madrid. FBI officials said Mayfield, an Oregon attorney who

was also Muslim, had been identified as the source of a fingerprint

on a bag of detonators connected to the attack. Mayfield was released

two weeks later, after the FBI examined the prints of a suspect

the Spanish National Police had correctly identified as the source

of the print.

Patrick Henry is

famous for saying, “Give me liberty or give me death.” But

he also said, “Guard with jealous attention the public

liberty. Suspect everyone who approaches that jewel. Unfortunately,

nothing will preserve it but downright force. Whenever you give

up that force, you are inevitably ruined.” In the Historical

Review of Pennsylvania, Benjamin Franklin wrote, “They

that can give up essential liberty to obtain a little temporary

safety deserve neither liberty nor safety.” Obviously,

both founding fathers valued liberty, perhaps above all else.

Discussing

his memorial tribute “Let’s Roll” in

the April 2002 issue of USA Today, Neil Young called Franklin’s

love of personal freedom into question. He said, “Benjamin

Franklin said that anyone who gives up essential liberties to preserve

freedom is a fool, but maybe he didn’t conceive of nuclear

war and dirty bombs.”

It’s probable that neither Franklin

nor Henry envisioned a day when a jet could fell a building and

kill almost 3,000 people in a matter of minutes. Neither did they

imagine a device small enough to hold in the palm of a hand but

powerful enough to record a conversation inside a building or behind

a wall. They certainly could not have conceived of removing their

shoes and placing them, along with other personal items, in a scanner

prior to boarding an aircraft. And, even though espionage was part

of their world, it’s likely they never considered the possibility

of a suicide bomber or any kind of environmentally toxic device.

Technology was as foreign to them as it is familiar to today’s

society.

The

question raised, however, is the same: Is security (safety) or

personal liberty (privacy) more important? On September 12, 2001,

most Americans would have gladly sacrificed a little convenience,

even a little privacy, to change the horror of the previous day.

Powerless to change the past, they (we) continue to search for

answers, philosophical and technological, so that this particular

piece of history can never repeat itself.

|

|

|

|

What do the story of the prodigal son and Ashley Wilkes’ homecoming

in Gone with the Wind have in common? Both the young man’s father

and Melanie Wilkes identified a loved one from a distance. Their recognition

was not solely for dramatic effect; the manner in which an individual

walks can be an identifying feature. The most recent evidence of this

was described in an article titled “Visual Analysis of Gait as

a Cue to Identity,” which was published in the December 1999

issue of Applied Cognitive Psychology. The article indicated something

known to anyone waiting and watching for a loved one’s return:

humans can, with very brief exposure and under a variety of lighting

conditions, identify other individuals by their gait.

In spite of warnings to not judge a book by its cover, people around

the world have been judging faces since time began. Look at the

portraits in any museum. The faces reflect beauty, youth, wisdom,

age, innocence, and evil as seen through the artist’s eyes.

Today, instead of trusting what can be seen, facial recognition

systems are helping security forces distinguish the innocent from

the evil beyond what is readily visible.

Using

two- and three-dimensional images, Notre Dame researchers map the

topography (shape and depth) of a face. The outer corner of the

eye, the tip of the nose, and the center of the chin become landmark

points as a series of photos of an individual is taken: looking

directly at the camera with a normal expression, smiling, and under

spotlights.

Ears are like fingerprints in that they are unique to each individual

and, without surgical intervention, do not change shape throughout

a person’s life. For this reason, ears have been used as

biometric tools but much less frequently than faces or fingerprints.

In fact, the United States Citizenship and Immigration Services,

formerly the Immigration and Naturalization Service, used to require

that every applicant provide two identical photos showing the entire

face, including the right ear and left eye, for use in visas or

passports. This policy was changed in August 2004 to comply with

the Border Security Act of 2003. All new photos must now exhibit

a full-frontal face position in color.

The change in photographic

requirements, however, does not negate the usefulness of the ear

as a tool in biometric recognition. In a recent Notre Dame project,

which was funded by the National Science Foundation and the Intelligence

Technology Innovation Center, Bowyer and

Doddington’s

menagerie was based on sheep, goats, lambs, and wolves. Sheep

represented the majority of the population. Readily distinguishable

one from another, they were easy for recognition systems to identify

and model. Goats were speakers who were more difficult for a

system to recognize. A goat, for example, might not provide a

match to itself from one day to the next. Lambs adversely affected

the performance of a speaker recognition system, because they

were so similar one to another and were very easy to imitate.

Wolves, according to the Doddington scale, also negatively affected

recognition systems, because they were great imitators.
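
The four categories can be illustrated with a rough sketch. It assumes each speaker has three sets of match scores between 0 and 1, and it uses fixed cutoffs (`low_genuine`, `high_impostor`) that are hypothetical values chosen for illustration. The function name and data layout are likewise assumptions, not part of Doddington’s analysis, which relied on statistical tests rather than fixed thresholds.

```python
from statistics import mean


def classify_speakers(genuine, as_target, as_attacker,
                      low_genuine=0.5, high_impostor=0.5):
    """Assign each speaker a menagerie label from average match scores.

    genuine[s]     -- scores when s is compared with s's own model
    as_target[s]   -- scores when other speakers are compared with s's model
    as_attacker[s] -- scores when s is compared with other speakers' models
    The two cutoffs are illustrative assumptions, not published values.
    """
    labels = {}
    for speaker in genuine:
        if mean(genuine[speaker]) < low_genuine:
            # Fails to match its own model reliably.
            labels[speaker] = "goat"
        elif mean(as_target[speaker]) > high_impostor:
            # Others match this speaker's model too easily.
            labels[speaker] = "lamb"
        elif mean(as_attacker[speaker]) > high_impostor:
            # This speaker matches other people's models too easily.
            labels[speaker] = "wolf"
        else:
            # Matches itself well and is not confused with anyone else.
            labels[speaker] = "sheep"
    return labels


# Made-up scores for four hypothetical speakers.
genuine = {"ann": [0.9, 0.8], "bob": [0.3, 0.4], "cat": [0.9, 0.85], "dan": [0.9, 0.9]}
as_target = {"ann": [0.1, 0.2], "bob": [0.1, 0.1], "cat": [0.7, 0.8], "dan": [0.2, 0.1]}
as_attacker = {"ann": [0.1, 0.2], "bob": [0.2, 0.1], "cat": [0.2, 0.2], "dan": [0.7, 0.9]}
labels = classify_speakers(genuine, as_target, as_attacker)
```

A speaker could plausibly fall into more than one category; this sketch simply reports the first one that applies.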

Although some of the biometric techniques depicted by Hollywood are

more science fiction than fact, iris recognition is one of the

most accurate forms of identification known to man. The probability

of the irises from two individuals being identical is estimated

at 1 in 10

Benefits of iris recognition include the fact that it is a non-invasive

form of technology. High-resolution cameras quickly capture, store,

and analyze images without touching a person or damaging the eye.

The challenge faced by iris recognition systems is rooted in the

initial image capture. Currently, researchers acquire images from

a stationary subject positioned within inches of a camera. In controlled

facilities, such as a corporate laboratory or military base, the

subject might sit or stand six to 12 inches away from a camera’s

lens. Many controlled facilities also ask subjects to remove their

glasses, although it is not clear how an image is affected if a subject

is wearing glasses or contacts.

Although the adage “two heads are better than one” was

written long before the advent of biometrics, Bowyer and Flynn contend

it applies quite well. In fact, they suggest that the future of biometrics

lies in the use of multi-modal systems. For example, iris recognition

alone cannot be applied to everyone. One in 17,000 people in the

U.S. has some type of albinism, which affects the pigment in their

irises. Acquiring an accurate image of an albino’s iris is

difficult. Involuntary eye movement can also confuse iris recognition

systems. Speaker recognition systems will never work on a mute person.

Gait technologies may trigger a false positive for someone who has

back problems or has had hip replacement surgery or polio. Because

of the involuntary motion associated with Parkinson’s disease,

the faces of people afflicted with that disease are difficult to

capture. Another segment of the population wears veils for religious

reasons, which means that facial recognition systems will not work

on them either.
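
One simple way to picture a multi-modal system is as a weighted average of whichever biometric scores could actually be captured for a given person. The sketch below is only an illustration under that assumption: the function name, the modality names, and the weights are hypothetical, and it is not a description of the method Bowyer and Flynn use.

```python
def fuse_scores(scores, weights):
    """Combine per-modality match scores into a single score.

    scores  -- modality name -> score in [0, 1], or None if that
               modality could not be captured for this person
    weights -- modality name -> relative trust in that modality
               (hypothetical values for illustration)
    """
    # Keep only the modalities that actually produced a score.
    usable = {name: s for name, s in scores.items() if s is not None}
    if not usable:
        raise ValueError("no modality produced a score")
    # Renormalize the weights over the modalities that remain.
    total_weight = sum(weights[name] for name in usable)
    return sum(weights[name] * s for name, s in usable.items()) / total_weight


# A subject whose iris could not be imaged still gets a fused score
# from the face and gait measurements.
weights = {"iris": 0.5, "face": 0.3, "gait": 0.2}
scores = {"iris": None, "face": 0.8, "gait": 0.6}
fused = fuse_scores(scores, weights)
```

Because the weights are renormalized over the available modalities, a missing measurement lowers the amount of evidence but does not automatically count against the person.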

In

addition to applications in law enforcement, fingerprinting technologies

are being used in child identification kits for parents. They are

also employed by companies in a variety of different applications

and markets as biometric sensors. For example, companies are spending

millions of dollars to erect firewalls and install intruder detection

systems on their computer networks, but many are also replacing

passwords with fingerprint sensors. These sensors can control laptops,

computer mice, and portable hard drives, and are even being used to

personalize wireless devices. In some cell phone systems different

fingers operate different speed dials or open separate buddy lists.

Fingerprint biometrics can also control door locks and smart card

readers.
