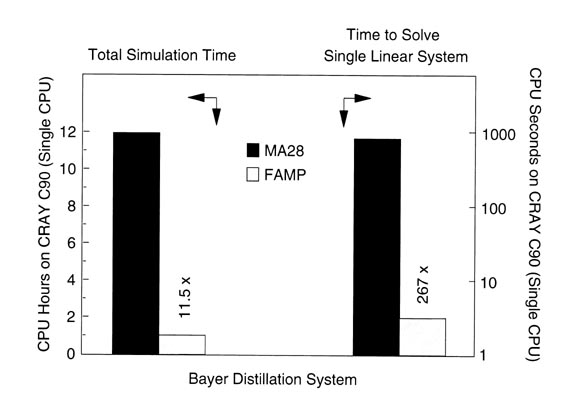

This example (Figure 4, right) involves a dynamic simulation run with Aspen Technology's SPEEDUP package on a Cray C90 vector machine not too many years ago. It shows what happens when you change sparse matrix solvers to take better advantage of vectorization. The comparison was done by Steve Zitney in collaboration with people at Bayer [1]. With MA28, the conventional sparse matrix solver of the time, the simulation took about 12 hours. Since it covered a much shorter period of actual plant time, the simulation ran slower than real time, which was not a good thing. Switching to the FAMP solver, which Steve Zitney developed in my group and which exploits the vector computer architecture, reduced the simulation time by an order of magnitude and the time to solve a single linear system by two orders of magnitude.
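One way to read the gap between the two speedups (two orders of magnitude for a single linear solve, one order for the whole simulation) is through Amdahl's law: the linear solves are only part of each run, so the rest of the work limits the overall gain. The sketch below is a back-of-the-envelope illustration, not part of the original study. It assumes round figures of 100x and 10x for the two speedups and assumes that only the solver changed; the function names are hypothetical.

```python
def overall_speedup(solver_fraction: float, solver_speedup: float) -> float:
    """Amdahl's law: overall speedup when only the solver portion gets faster."""
    return 1.0 / ((1.0 - solver_fraction) + solver_fraction / solver_speedup)


def implied_solver_fraction(overall: float, solver_speedup: float) -> float:
    """Invert Amdahl's law: share of the original run time spent in the solver."""
    return (1.0 - 1.0 / overall) / (1.0 - 1.0 / solver_speedup)


# Assumed round numbers: "an order of magnitude" read as 10x overall,
# "two orders of magnitude" read as 100x per linear solve.
fraction = implied_solver_fraction(overall=10.0, solver_speedup=100.0)
print(f"implied solver share of original run time: {fraction:.1%}")
print(f"check, overall speedup: {overall_speedup(fraction, 100.0):.1f}x")
```

Under these assumed figures, roughly nine tenths of the original run time would have gone into linear solves, which is consistent with the solver being the bottleneck worth attacking. The exact share depends on the actual measured speedups, which are reported here only to the nearest order of magnitude.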