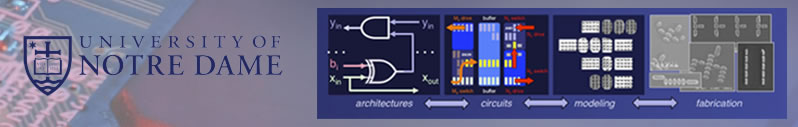

The primary focus of my work is on computation beyond the CMOS field-effect transistor, whose continued scaling may ultimately be limited by physics, cost, and manufacturing-related issues.

Nanomagnet Logic

My research efforts have primarily targeted computational systems in which magnetic elements with nanometer-scale feature sizes are used both to process and to store binary information. Why is this good? In conventional electronics, most computation is charge-based: a power supply maintains state, and thousands of electrons (each at ~40 kT) are needed to perform a single function. By contrast, nanomagnet logic (NML) devices can process information in a cellular-automata-like architecture, could dissipate < 40 kT per switching event for a gate operation, retain state without power, and are intrinsically radiation hard. In terms of applications, NML therefore has the potential to mitigate the rising chip-level power densities that device scaling currently exacerbates, could help improve battery life in mobile information processing systems, and may operate in environments where transistor-based logic and memory cannot.
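To put the ~40 kT figure in absolute terms, the short calculation below converts it to joules and electron-volts. It assumes room temperature (T = 300 K). The count of 1,000 electrons per function is a hypothetical stand-in for the "thousands of electrons" mentioned above, not a measured value.

```python
# Exact SI values (2019 redefinition)
K_B = 1.380649e-23      # Boltzmann constant, J/K
Q_E = 1.602176634e-19   # elementary charge, C (equivalently J per eV)


def kt_energy(multiple: float, temperature_k: float = 300.0) -> float:
    """Return `multiple` * kT in joules; 300 K is an assumed room temperature."""
    return multiple * K_B * temperature_k


def joules_to_ev(energy_j: float) -> float:
    """Convert an energy in joules to electron-volts."""
    return energy_j / Q_E


# Per-electron energy quoted in the text (~40 kT)
per_electron_j = kt_energy(40)

# Hypothetical electron count standing in for "thousands of electrons"
ELECTRONS_PER_FUNCTION = 1000
cmos_function_j = ELECTRONS_PER_FUNCTION * per_electron_j

# Upper bound quoted for a single NML switching event (< 40 kT)
nml_event_bound_j = kt_energy(40)

print(f"40 kT at 300 K = {per_electron_j:.3e} J = {joules_to_ev(per_electron_j):.2f} eV")
print(f"{ELECTRONS_PER_FUNCTION} electrons x 40 kT = {cmos_function_j:.3e} J")
print(f"charge-based function / NML event bound = {cmos_function_j / nml_event_bound_j:.0f}x")
```

At 300 K, 40 kT comes to about 1 eV, roughly the energy of one electron moved through a 1 V supply. Under these assumptions, the gap between a charge-based function and one NML switching event therefore scales directly with the number of electrons involved.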

For more information, see the project page.

Non-Boolean Computer Architectures

Most information processing is Boolean. This paradigm has deservedly persisted thanks to Moore's Law scaling. However, it is widely expected that Moore's Law will face hard limits due to issues such as the device-to-device variation that accompanies further miniaturization, power density requirements, etc.

An alternative approach is to process information in a non-Boolean fashion, and nature suggests that this approach can be very successful. The human brain works well at 30 W and is capable of delivering the equivalent of 3 × 10⁶ MIPS/W for certain applications. By comparison, a Dell Pentium 4 might operate at 200 W and deliver just 5 MIPS/W [1]. Additionally, a commodity cellular nonlinear network (CNN)-based image processor with an area of 1.4 cm² and a power budget of just 4.5 W can match the performance of the IBM cellular supercomputer, which has an area of ~7 m² and a power budget of 491 kW [2]. While non-Boolean systems may not map well to general-purpose processing, they are capable of solving problems that are expected to be commonplace in future information processing workloads (pattern matching, etc.). As such, investment in non-Boolean hardware, both for standalone functionality and as hardware accelerators, seems promising.
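The size of these gaps is easier to see as ratios. The sketch below only divides the figures quoted in the paragraph above ([1], [2]). It assumes the CNN processor and the supercomputer deliver equal performance, as stated, so the area and power ratios compare like with like.

```python
# Figures quoted in the text
BRAIN_MIPS_PER_W = 3e6          # human brain, certain applications [1]
PENTIUM4_MIPS_PER_W = 5.0       # Dell Pentium 4 at ~200 W [1]

CNN_AREA_CM2 = 1.4              # CNN-based image processor [2]
CNN_POWER_W = 4.5
SUPERCOMPUTER_AREA_M2 = 7.0     # IBM cellular supercomputer (~7 m^2) [2]
SUPERCOMPUTER_POWER_W = 491e3   # 491 kW

CM2_PER_M2 = 1e4                # unit conversion: 1 m^2 = 10,000 cm^2

# Energy-efficiency gap between the brain and the Pentium 4
efficiency_gap = BRAIN_MIPS_PER_W / PENTIUM4_MIPS_PER_W

# Area and power ratios at (stated) equal performance
area_ratio = SUPERCOMPUTER_AREA_M2 * CM2_PER_M2 / CNN_AREA_CM2
power_ratio = SUPERCOMPUTER_POWER_W / CNN_POWER_W

print(f"brain vs. Pentium 4, MIPS/W: {efficiency_gap:,.0f}x")
print(f"supercomputer vs. CNN chip, area: {area_ratio:,.0f}x")
print(f"supercomputer vs. CNN chip, power: {power_ratio:,.0f}x")
```

With these numbers, the brain is about 600,000 times more energy-efficient than the Pentium 4. The CNN chip uses about 50,000 times less area and about 109,000 times less power than the supercomputer.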

[1] Ralph Cavin, “Computation vis-à-vis Physics.”

[2] Analogical and Neural Computing Lab, Budapest.

For more information, see the project page.

Energy-efficient GPU-based Acceleration of Large Graph Algorithms on Heterogeneous Clusters

Solving basic graph problems such as Breadth-First Search (BFS) and All-Pairs Shortest Path (APSP) on large data sets is crucial in many applications, yet these problems are challenging to accelerate on GPUs: some existing GPU implementations of graph algorithms on large data sets may even be slower than the latest CPU implementations. Processing such large data sets can also consume significant energy. This research aims to investigate approaches that improve the performance and reduce the energy consumption of solving basic graph problems. The focus will be on building a heterogeneous cluster composed of high-performance Intel Core i7 processors, low-power Atom processors, and high-end GPU cards to execute large graph algorithms at lower energy and lower cost than homogeneous clusters.
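A short CPU reference sketch makes the two problems named above concrete. It is not this project's implementation, and it makes the following assumptions:

- Graphs are small and held in memory.
- BFS takes an adjacency-list dictionary.
- APSP takes an edge list with no negative cycles.
- Floyd-Warshall is used as one standard APSP algorithm.

The comments mark the loops whose iterations are independent. That is the work GPU implementations generally spread across threads.

```python
from math import inf


def bfs_levels(adj: dict[int, list[int]], source: int) -> dict[int, int]:
    """Level-synchronous BFS: expand the whole frontier one level at a time.

    Expanding a frontier is the step GPU BFS kernels commonly parallelize,
    since each frontier vertex can be processed independently.
    """
    level = {source: 0}
    frontier = [source]
    depth = 0
    while frontier:
        depth += 1
        next_frontier = []
        for u in frontier:               # independent per-vertex work
            for v in adj.get(u, []):
                if v not in level:       # first visit fixes v's BFS level
                    level[v] = depth
                    next_frontier.append(v)
        frontier = next_frontier
    return level


def floyd_warshall(n: int, edges: list[tuple[int, int, float]]) -> list[list[float]]:
    """All-pairs shortest paths on vertices 0..n-1: O(n^3) time, O(n^2) memory."""
    dist = [[0.0 if i == j else inf for j in range(n)] for i in range(n)]
    for u, v, w in edges:
        dist[u][v] = min(dist[u][v], w)  # keep the lightest parallel edge
    for k in range(n):
        # For a fixed k, row k and column k do not change, so every (i, j)
        # update in this iteration is independent of the others.
        for i in range(n):
            dik = dist[i][k]
            if dik == inf:
                continue
            for j in range(n):
                if dik + dist[k][j] < dist[i][j]:
                    dist[i][j] = dik + dist[k][j]
    return dist


if __name__ == "__main__":
    graph = {0: [1, 2], 1: [3], 2: [3], 3: []}
    print(bfs_levels(graph, 0))          # {0: 0, 1: 1, 2: 1, 3: 2}

    d = floyd_warshall(3, [(0, 1, 4.0), (1, 2, 1.0), (0, 2, 7.0)])
    print(d[0][2])                       # 5.0, via 0 -> 1 -> 2
```

Floyd-Warshall's O(n²) distance matrix illustrates why large data sets strain GPU memory and energy budgets. At a million vertices, the matrix alone holds 10¹² entries.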