Homework 04: Python Scripting

The goal of this homework assignment is to allow you to practice using Python to create scripts that require sophisticated parsing of data and manipulation of data structures. In this assignment, you will download data in both CSV and JSON format, process it, and then present it to the user in the terminal.

For this assignment, record your scripts and any responses to the following

activities in the homework04 folder of your assignments GitLab

repository and push your work by 11:59 PM Monday, February 25.

Activity 0: Preparation

Before starting this homework assignment, you should first perform a git

pull to retrieve any changes in your remote GitLab repository:

$ cd path/to/repository # Go to assignments repository $ git checkout master # Make sure we are in master branch $ git pull --rebase # Get any remote changes not present locally

Next, create a new branch for this assignment:

$ git checkout -b homework04 # Create homework04 branch and check it out

Once this is done, download the Makefile and test scripts:

# Go to homework04 folder $ cd homework04 # Download the Makefile $ curl -LOk https://gitlab.com/nd-cse-20289-sp19/cse-20289-sp19-assignments/raw/master/homework04/Makefile # Add and commit Makefile $ git add Makefile $ git commit -m "homework04: add Makefile" # Download the test scripts $ make test-scripts

Note, you do not need to add and commit the test scripts since the Makefile will automaticaly download them again whenever you run make.

You are now ready to work on the activities below.

Activity 1: CSE Demographics (5 Points)

One of the most pressing questions in Computer Science education is

addressing the lack of diversity in computing. In order to meanfully

examine this issue, we will first need to look at some raw data. For this

activity, you are to a script, demographics.py, that downloads, processes,

and visualizes the Computer Science and Engineering demographic data from

the classes of 2013 through 2021 and then answer some reflection

questions.

Background

For context and background material, you may wish to first read some of the following articles regarding gender and ethnic diversity in Computer Science and the technology industry in general:

Ethical and Professional Issues

This assignment is inspired by a discussion we have in the CSE 40175 Ethical and Professional Issues class, which is a required course for all Computer Science and Engineering students.

Demographic Data

Our beloved Ramzi has graciously provided us with the student demographic data for all Computer Science and Engineering students from the class of 2013 through 2021. This data looks something like this:

$ curl -sLk https://yld.me/raw/MtP | head -n 10 Year,Gender,Ethnic 2013,M,C 2014,M,C 2015,F,N 2016,F,N 2017,M,C 2018,M,C 2013,M,C 2014,M,S 2015,M,C

As can be seen above, the demographics CSV data has three columns:

-

The first column represents the graduating class year that student belongs to.

-

The second column records the gender of the student and contains either

MorFwhich stands forMaleorFemalerespectively. -

The third column records the ethnicity of the student and consists of the following mapping:

Letter Ethnicity C Caucasian O Asian S Hispanic B African American N Native American / Pacific Islanders T Multiple Selection U Undeclared

Task 1: demographics.py

The demographics.py script takes the following arguments:

# Display usage message $ ./demographics.py -h Usage: demographics.py [options] URL -y YEARS Which years to display (default: all) -p Display data as percentages. -G Do not include gender information. -E Do not include ethnic information.

The -y flag specifies which years to display (the default is to display all

the years in the CSV data).

The -p flag forces the script to output data as percentages rather than raw

counts.

The -G and -E flags suppresses outputting the gender and ethnic data

respectively.

The user may specify a URL to the CSV file. If one is not specified then

the script will use https://yld.me/raw/MtP as the

URL.

Note, the years are always displayed in ascending order.

Examples

Here are some examples of demographics.py in action:

# No arguments $ ./demographics.py 2013 2014 2015 2016 2017 2018 2019 2020 2021 ================================================================================ M 49 44 58 60 65 101 96 93 91 F 14 12 16 19 26 45 54 36 47 -------------------------------------------------------------------------------- B 3 2 4 1 5 3 3 4 6 C 43 43 47 53 60 107 96 87 94 N 1 1 1 7 5 5 13 12 14 O 7 5 9 9 12 10 13 10 8 S 7 4 10 9 3 13 10 9 10 T 2 1 1 0 6 8 15 6 5 U 0 0 2 0 0 0 0 1 1 -------------------------------------------------------------------------------- # Show percentages rather than raw counts $ ./demographics.py -p 2013 2014 2015 2016 2017 2018 2019 2020 2021 ================================================================================ M 77.8% 78.6% 78.4% 75.9% 71.4% 69.2% 64.0% 72.1% 65.9% F 22.2% 21.4% 21.6% 24.1% 28.6% 30.8% 36.0% 27.9% 34.1% -------------------------------------------------------------------------------- B 4.8% 3.6% 5.4% 1.3% 5.5% 2.1% 2.0% 3.1% 4.3% C 68.3% 76.8% 63.5% 67.1% 65.9% 73.3% 64.0% 67.4% 68.1% N 1.6% 1.8% 1.4% 8.9% 5.5% 3.4% 8.7% 9.3% 10.1% O 11.1% 8.9% 12.2% 11.4% 13.2% 6.8% 8.7% 7.8% 5.8% S 11.1% 7.1% 13.5% 11.4% 3.3% 8.9% 6.7% 7.0% 7.2% T 3.2% 1.8% 1.4% 0.0% 6.6% 5.5% 10.0% 4.7% 3.6% U 0.0% 0.0% 2.7% 0.0% 0.0% 0.0% 0.0% 0.8% 0.7% -------------------------------------------------------------------------------- # Show only gender percentages for the years 2019, 2020, and 2021 (with explicit URL) $ ./demographics.py -y 2019,2020,2021 -E -p https://yld.me/raw/MtP 2019 2020 2021 ================================ M 64.0% 72.1% 65.9% F 36.0% 27.9% 34.1% --------------------------------

Spacing and Formatting

Note, to pass the provided tests, you must match the spacing and formatting

exactly. Each column must be separated by the tab character (ie.

\t).

Skeleton

Here is a skeleton you can use to start your demographics.py script:

# Download demographics.py skeleton

$ curl -sLOk https://gitlab.com/nd-cse-20289-sp19/cse-20289-sp19-assignments/raw/master/homework04/demographics.py

It should look something like this:

#!/usr/bin/env python3 import collections import os import sys import requests # Globals URL = 'https://yld.me/raw/MtP' GENDERS = ('M', 'F') ETHNICS = ('B', 'C', 'N', 'O', 'S', 'T', 'U') # Functions def usage(status=0): ''' Display usage information and exit with specified status ''' print('''Usage: {} [options] URL -y YEARS Which years to display (default: all) -p Display data as percentages. -G Do not include gender information. -E Do not include ethnic information. '''.format(os.path.basename(sys.argv[0]))) sys.exit(status) def load_demo_data(url=URL): ''' Load demographics from specified URL into dictionary ''' return None def dump_demo_data(data, years=None, percent=False, gender=True, ethnic=True): ''' Dump demographics data for the specified years and attributes ''' pass def dump_demo_separator(years, char='='): ''' Dump demographics separator ''' pass def dump_demo_years(years): ''' Dump demographics years information ''' pass def dump_demo_fields(data, years, fields, percent=False): ''' Dump demographics information (for particular fields) ''' pass def dump_demo_gender(data, years, percent=False): ''' Dump demographics gender information ''' pass def dump_demo_ethnic(data, years, percent=False): ''' Dump demographics ethnic information ''' pass # Parse Command-line Options args = sys.argv[1:] while len(args) and args[0].startswith('-') and len(args[0]) > 1: arg = args.pop(0) # Main Execution

Hints

-

load_demo_data: To fetch the demographic data, you can use the requests.get method. You can then access the raw data via thetextproperty of the object returned by requests.get. You will need to think carefully about how you want organize the CSV into a dictionary. -

dump_demo_data: To display the table of data, this function should call thedump_demo_yearsfunction, followed by thedump_demo_genderanddump_demo_ethnicfunctions if necessary. -

dump_demo_separator: To display a separator line, this function should keep in mind that atabcharacter is always8spaces and consider multiplying thechar. -

dump_demo_years: To display the years, this function should use the str.join method on the list ofyearsalong with thedump_demo_separatorfunction. -

dump_demo_fields: To display all the rows for a given list offields, this function should build a row of text column by column. Special care must be taken to display percentages versus raw counts. -

dump_demo_gender: To display all the gender information, this function simply calls thedump_demo_fieldsanddump_demo_separatorfunctions. -

dump_demo_ethnic: To display all the ethnic information, this function simply calls thedump_demo_fieldsanddump_demo_separatorfunctions. -

While parsing command-line arguments, global variables corresponding to each flag should be set. These globals will later be used in the main execution.

-

The main execution should simply be calling the

load_demo_dataanddump_demo_datafunctions with the global variables set during command-line parsing.

Iterative and incremental development

Do not try to implement everything at once. Instead, approach this activity with the iterative and incremental development mindset and slowly build pieces of your application one feature at a time:

-

Parse the program arguments.

-

Fetch the data from the specified URL and organize into a dictionary.

-

Write the table header.

-

Write one table row based on a field.

-

Generalize writing table rows.

Remember that the goal at the end of each iteration is that you have a working program that successfully implements all of the features up to that point.

Focus on one thing at a time and feel free to write small test code to try things out.

Task 2: test_demographics.sh

To aid you in testing the demographics.py script, we have provided you with

test_demographics.sh, which you can use as follows:

$ ./test_demographics.sh Testing demographics.py ... Bad arguments ... Success -h ... Success No arguments ... Success MtP ... Success MtP -y 2013 ... Success MtP -y 2017,2021,2019 ... Success MtP -p ... Success MtP -G ... Success MtP -E ... Success ilG ... Success ilG -y 2016 -p ... Success ilG -y 2016 -p -E ... Success ilG -y 2016 -p -E -G ... Success Score 4.00

Task 3: README.md

In your README.md, briefly discuss the following questions:

-

What are the overall trends in the gender balance and ethnic diversity in the Computer Science and Engineering program at the University of Notre Dame? Is this surprising to you or not? Explain.

-

Does the Computer Science and Engineering department provide a welcoming and supportive learning environment to all students? In what ways can it improve? Explain.

Activity 2: Reddit (5 Points)

Katie likes to sit in the back of the class. It has its perks:

-

She can beat the rush out the door when class ends.

-

She can see everyone browsing Facebook, playing video games1, watching YouTube, or doing homework.

-

She feels safe from being called upon by the instructor... except when he does that strange thing where he goes around the class and tries to talk to people. Totally weird 2.

That said, sitting in the back has its downsides:

-

She can never see what the instructor is writing because he has terrible handwriting and always writes too small.

-

She is prone to falling asleep because the instructor is really boring and the class is not as interesting as Data Structures.

To combat her boredom, Katie typically just browses Reddit. Her favorite subreddits are AdviceAnimals, aww, todayilearned, and of course UnixPorn. Lately, however, Katie has grown paranoid that her web browser is leaking information about her3, and so she wants to be able to get the latest links from Reddit directly in her terminal.

Fortunately for Katie, Reddit provides a JSON feed for every subreddit.

You simply need to append .json to the end of each subreddit. For

instance, the JSON feed for todayilearned can be found here:

https://www.reddit.com/r/todayilearned/.json

To fetch that data, Katie uses the Requests package in Python to access the JSON data:

r = requests.get('https://www.reddit.com/r/todayilearned/.json') print(r.json())

429 Too Many Requests

Reddit tries to prevent bots from accessing its website too often. To work

around any 429: Too Many Requests errors, we can trick Reddit by

specifying our own user agent:

headers = {'user-agent': 'reddit-{}'.format(os.environ.get('USER', 'cse-20289-sp19'))} response = requests.get(url, headers=headers)

This should allow you to make requests without getting the dreaded 429 error.

The code above would output something like the following:

{"kind": "Listing", "data": {"modhash": "g8n3uwtdj363d5abd2cbdf61ed1aef6e2825c29dae8c9fa113", "children": [{"kind": "t3", "data": ...

Looking through that stream of text, Katie sees that the JSON data is a

collection of structured or hierarchical dictionaries and lists. This

looks a bit complex to her, so she wants you to help her complete the

reddit.py script which fetches the JSON data for a specified

subreddit or URL and allows the user to sort the articles by various

fields, restrict the number of items displayed, and even shorten the URLs

of each article.

Task 1: reddit.py

The reddit.py script takes the following arguments:

$ ./reddit.py Usage: reddit.py [options] URL_OR_SUBREDDIT -s Shorten URLs using (default: False) -n LIMIT Number of articles to display (default: 10) -o ORDERBY Field to sort articles by (default: score) -t TITLELEN Truncate title to specified length (default: 60)

The -s flag shortens URLs using the is.gd web service. This means

that long URLs are converted into shorter ones such as: https://is.gd/y0iqnn

The -n flag specifies the number of articles to display. By default, this is 10.

The -o flag specifies the Reddit article attribute to use for sorting the

articles. By default this should be score. Note, if the ORDERBY

parameter is score, then the articles should be ordered in descending

order. Otherwise, the articles should be ranked in ascending order.

The -t flag specifies the maximum length for the articles' titles. Titles

longer than this value should be truncated. The default value is 60.

Examples

Here are some examples of reddit.py in action:

# Show Linux subreddit $ ./reddit.py linux 1. Look who showed up at work today! (Score: 1052) https://i.redd.it/i4k4vz01u5g21.jpg 2. A proof that Unix utility sed is Turing complete (Score: 583) https://catonmat.net/proof-that-sed-is-turing-complete 3. We are Plasma Mobile developers, AMA (Score: 545) https://www.reddit.com/r/linux/comments/anspo5/we_are_plasma_mobile_developers_ama/ 4. [RELEASE] KDE Plasma 5.15: Lightweight, Usable and Productiv (Score: 479) https://www.kde.org/announcements/plasma-5.15.0.php 5. VoidLinux.eu Is Not Ours (Score: 169) https://voidlinux.org/news/2019/02/voidlinux-eu-gone.html 6. OpenSUSE did something awesome and it didn't get enough atte (Score: 167) https://www.reddit.com/r/linux/comments/apzdco/opensuse_did_something_awesome_and_it_didnt_get/ 7. Openrsync - OpenBSD releases its own rsync implementation (Score: 157) https://github.com/kristapsdz/openrsync/blob/master/README.md 8. I've made a Solitaire Game for your Terminal (Score: 148) https://gir.st/sol.htm 9. Vim Is Saving Me Hours of Work When Writing Books & Cour (Score: 124) https://nickjanetakis.com/blog/vim-is-saving-me-hours-of-work-when-writing-books-and-courses 10. Freedom EV: free/open replacement firmware for your electric (Score: 117) https://youtu.be/k7jbERL-Jd0 # Show top 5 nba articles $ ./reddit.py -n 5 nba 1. [Lewenberg] Jeremy Lin will be wearing No. 17 with the Rapto (Score: 10148) https://twitter.com/JLew1050/status/1095428759623069696 2. [Wojnarowski] Eleven months after hip surgery, Denver Nugget (Score: 10145) https://twitter.com/wojespn/status/1095751682569523200 3. [Sepkowitz] “I can’t imagine what they’re trying to block ou (Score: 4012) https://bleacherreport.com/articles/2820645-dangelo-russell-is-breaking-free 4. Knox with the dunk on Ben Simmons (Score: 3786) https://streamable.com/9bxws 5. [Wojnarowski] Enes Kanter has agreed to a deal with Portland (Score: 2836) https://twitter.com/wojespn/status/1095801612671614977 # Show top 2 aoe2 articles sorted by title $ ./reddit.py -n 2 -o title aoe2 1. AI and Civ differences (Score: 2) https://www.reddit.com/r/aoe2/comments/aq5g83/ai_and_civ_differences/ 2. Back to the game since the 90s/2000s (Score: 2) https://www.reddit.com/r/aoe2/comments/aq7vzd/back_to_the_game_since_the_90s2000s/ # Show top 2 unixporn articles sorted by score (with shorten URLs) $ ./reddit.py -n 2 -o score -s unixporn 1. [Flurry] I know you like tiling managers and i want to show (Score: 1672) https://is.gd/VF8NQN 2. [i3] RickArch (Score: 660) https://is.gd/FwWO8w

We'll do it live

Note, since we are pulling data from an active website, the articles may change between runs.

Skeleton

Here is a skeleton you can use to start your reddit.py script:

# Download reddit.py skeleton

$ curl -sLOk https://gitlab.com/nd-cse-20289-sp19/cse-20289-sp19-assignments/raw/master/homework04/reddit.py

It should look something like this:

#!/usr/bin/env python3 import os import sys import requests # Globals URL = None ISGD_URL = 'http://is.gd/create.php' # Functions def usage(status=0): ''' Display usage information and exit with specified status ''' print('''Usage: {} [options] URL_OR_SUBREDDIT -s Shorten URLs using (default: False) -n LIMIT Number of articles to display (default: 10) -o ORDERBY Field to sort articles by (default: score) -t TITLELEN Truncate title to specified length (default: 60) '''.format(os.path.basename(sys.argv[0]))) sys.exit(status) def load_reddit_data(url=URL): ''' Load reddit data from specified URL into dictionary ''' pass def dump_reddit_data(data, limit=10, orderby='score', titlelen=60, shorten=False): ''' Dump reddit data based on specified attributes ''' pass def shorten_url(url): ''' Shorten URL using yld.me ''' pass # Parse Command-line Options args = sys.argv[1:] while len(args) and args[0].startswith('-') and len(args[0]) > 1: arg = args.pop(0) # Main Execution

Hints

-

load_reddit_data: To fetch the Reddit data, you can use the requests.get method. You can then access the JSON data via thejson()method of the object returned by requests.get. You will need to think carefully about what portion of the JSON you actually want to utilize. -

dump_reddit_data: To display the articles, this function can use the sorted function with either thereverseorkeyparameter to order the articles. It can then slice the resulting articles to limit the number of things displayed.Note, a

tabcharacter is used to separate theindexand thetitleand to indent theurl. -

shorten_url: To shorten a URL, you can use the requests.get method to make a request to the is.gd webservice with the appropriateparamsas shown below:requests.get(ISGD_URL, params={'format': 'json', 'url': url})

You will need to parse the result of this request to extract the shortened URL.

-

While parsing command-line arguments, global variables corresponding to each flag should be set. These globals will later be used in the main execution.

-

The main execution should simply be calling the

load_reddit_dataanddump_reddit_datafunctions with the global variables set during command-line parsing.

Task 2: test_reddit.sh

To aid you in testing the reddit.py script, we have provided you with

test_reddit.sh, which you can use as follows:

$ ./test_reddit.sh Testing reddit.py ... No arguments ... Success Bad arguments ... Success -h ... Success linuxactionshow ... Success linuxactionshow (-n 1) ... Success linuxactionshow (-o url) ... Success linuxactionshow (-t 10) ... Success linuxactionshow (-s) ... Success voidlinux ... Success voidlinux (-n 5) ... Success voidlinux (-n 5 -o title) ... Success voidlinux (-n 5 -o url -t 20) ... Success voidlinux (-n 2 -o score -t 40 -s) ... Success Score 4.00

Note, we chose an inactive subreddit, r/linuxactionshow for our test script since it should not change. However, it is possible that it may change4 and thus break our test script. Just let the staff know and adjustments can be made.

Task 3: README.md

In your README.md, briefly discuss the following:

-

Compare scripting in Python to scripting in shell. What are the advantages and disadvantages of either language? Could you have written this program in shell? Would you have wanted to? Explain.

-

Compare scripting in Python to programming in C++. What are the advantages and disadvantages of either language? Could you have written this program in C++? Would you have wanted to? Explain.

Guru Point (1 Point)

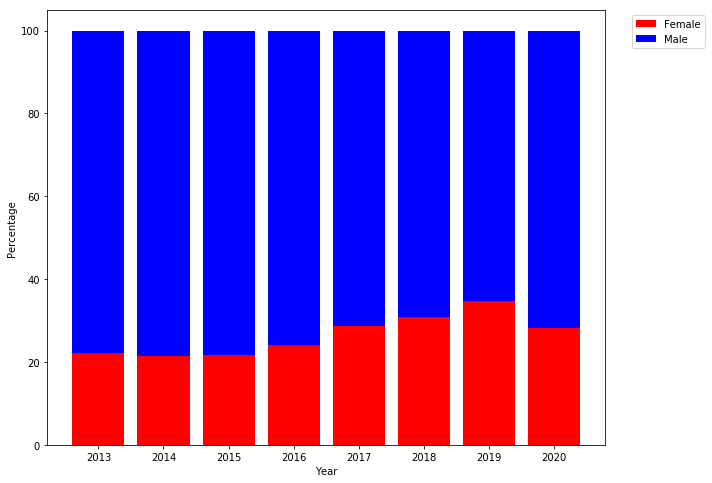

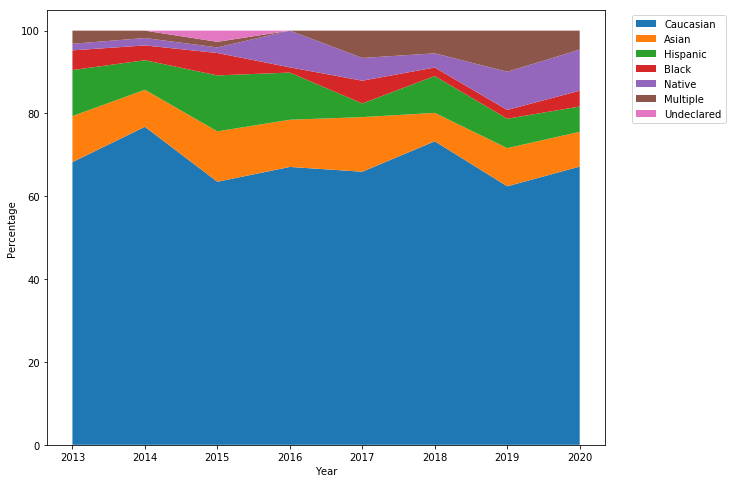

For extra credit, you are to visualize the CSE demographics data above as graphical plots using Python and matplotlib inside a Jupyter notebook. You should produce graphs like this (these are from last year):

To run Jupyter on the student machines:, you can use the following command:

$ jupyter-notebook-3.6 --ip studentXX.cse.nd.edu --port 9000 --no-browser

Replace studentXX.cse.nd.edu with the name of the student machine you are

on. Also, you may need to change the port to something else between 9000 -

9999.

To get credit, you must show either a TA or the instructor a demonstration of your Jupyter notebook with the code and visualization.

Feedback

If you have any questions, comments, or concerns regarding the course, please

provide your feedback at the end of your README.md.

Submission

To submit your assignment, please commit your work to the homework04 folder

of your homework04 branch in your assignments GitLab repository:

#-------------------------------------------------- # BE SURE TO DO THE PREPARATION STEPS IN ACTIVITY 0 #-------------------------------------------------- $ cd homework04 # Go to Homework 04 directory ... $ $EDITOR demographics.py # Edit script $ git add demographics.py # Mark changes for commit $ git commit -m "homework04: activity 1" # Record changes ... $ $EDITOR reddit.py # Edit script $ git add reddit.py # Mark changes for commit $ git commit -m "homework04: activity 2" # Record changes ... $ $EDITOR README.md # Mark changes for commit $ git add README.md # Mark changes for commit $ git commit -m "homework04: README" # Record changes $ git push -u origin homework04 # Push branch to GitLab

Remember to create a merge request and assign the appropriate TA from the Reading 05 TA List.