Project 02: Process Queue

Overview

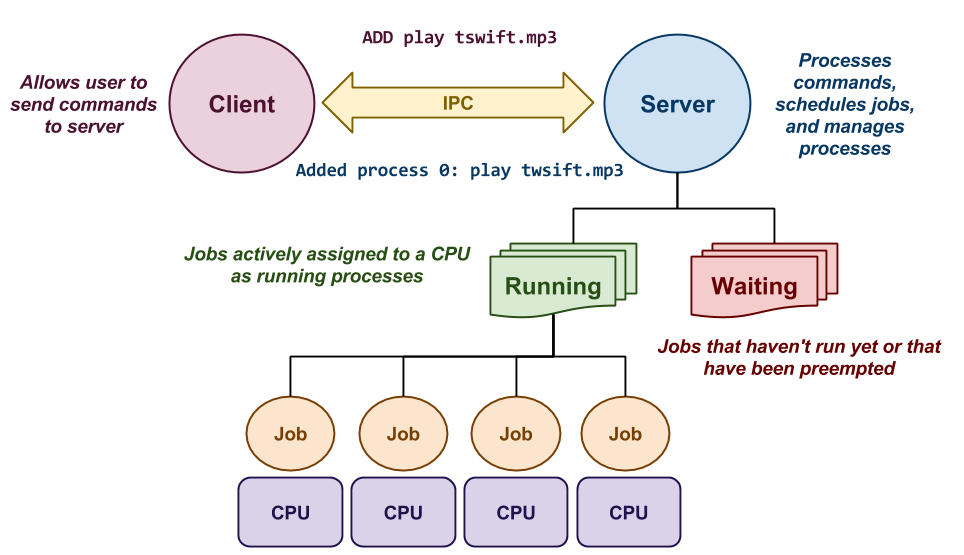

The second project is to build a multi-core process queue called pq,

whose purpose is to receive a series of jobs and then schedule them to run

on multiple CPU cores as separate processes.

This process queue has two components as shown above:

-

Client: This component allows the user to send the following commands to the server:

-

Add: This adds a command to the waiting queue on the server.

-

Status: This retrieves and displays the status of the queue.

-

Running: This retrieves and displays just the running jobs in the queue.

-

Waiting: This retrieves and displays just the waiting jobs in the queue.

-

Flush: This removes all the jobs from the queue (and terminates any active processes).

-

-

Server: This component maintains of a queue of running jobs (ie. tasks that actively assigned CPU time), and waiting jobs (ie. tasks that haven't started yet or have been preempted). Its purpose is to schedule as many running jobs as there are CPU cores using one of the three scheduling policies mentioned below.

The number of

CORESand the schedulingPOLICYcan be specified by the user when the server is started, but cannot change once it is active. By default, the server assumes one core and the FIFO scheduling policy.

Working in groups of one, two, or three people, you are to

create this pq application by midnight on Friday, September 21,

2018. More details about this project and your deliverables are

described below.

Balancing the Load

While it is unlikely that you will write an operating system scheduler in your career, you may need to implement or at least interact with things like load balancers or batch queues, especially in parallel or distributed environments such as the cloud.

For instance, many websites use Cloudflare which performs load balance to prevent denial-of-service attacks. Similarly, we have used HTCondor in the past to schedule many jobs on the distributed computing cluster at Notre Dame. In both of these situations some decision making is required to map jobs to a particular set of resources.

This project will allow to explore the challenges in this type of decision making by having you implementing and test different types of scheduling policies for a process queue.

Protocol

To communicate, the client and server must use a common IPC mechanism; in this case, we will be using Unix domain sockets.

The communication will be a simple text-based request and respond protocol that works as follows:

| Client Requests | Example | Server Responds | Example |

|---|---|---|---|

| ADD command | ADD play tswift.mp3 | Reports added process | Added process 0: play tswift.mp3 |

| STATUS | STATUS | Summary of sizes of runnning and waiting queues, average turnaround and response times, and the jobs in all queues. |

Running = 1, Waiting = 8, Levels = 0, Turnaround = 00.10, Response = 00.00

Running Queue:

PID COMMAND STATE USER THRESHOLD USAGE ARRIVAL START

2309 ./worksim.py 0.1 1 Sleeping 100 0 20.75 1505531908 1505531909

Waiting Queue:

PID COMMAND STATE USER THRESHOLD USAGE ARRIVAL START

0 ./worksim.py 0.1 1 Sleeping 0 0 0.00 1505531908 0

0 ./worksim.py 0.5 1 Sleeping 0 0 0.00 1505531908 0

0 ./worksim.py 0.5 1 Sleeping 0 0 0.00 1505531908 0

0 ./worksim.py 0.5 1 Sleeping 0 0 0.00 1505531908 0

0 ./worksim.py 0.9 1 Sleeping 0 0 0.00 1505531908 0

0 ./worksim.py 0.9 1 Sleeping 0 0 0.00 1505531908 0

0 ./worksim.py 0.9 1 Sleeping 0 0 0.00 1505531908 0

0 ./worksim.py 0.9 1 Sleeping 0 0 0.00 1505531908 0

|

| RUNNING | RUNNING | Lists all jobs in running queue. |

PID COMMAND STATE USER THRESHOLD USAGE ARRIVAL START

2309 ./worksim.py 0.1 1 Sleeping 100 0 20.75 1505531908 1505531909

|

| WAITING | WAITING | Lists all jobs in waiting queue. |

PID COMMAND STATE USER THRESHOLD USAGE ARRIVAL START

0 ./worksim.py 0.1 1 Sleeping 0 0 0.00 1505531908 0

0 ./worksim.py 0.5 1 Sleeping 0 0 0.00 1505531908 0

0 ./worksim.py 0.5 1 Sleeping 0 0 0.00 1505531908 0

0 ./worksim.py 0.5 1 Sleeping 0 0 0.00 1505531908 0

0 ./worksim.py 0.9 1 Sleeping 0 0 0.00 1505531908 0

0 ./worksim.py 0.9 1 Sleeping 0 0 0.00 1505531908 0

0 ./worksim.py 0.9 1 Sleeping 0 0 0.00 1505531908 0

0 ./worksim.py 0.9 1 Sleeping 0 0 0.00 1505531908 0

|

| FLUSH | FLUSH | Removes all jobs from all queues (terminates any that are active or suspended). |

Flushed 1 running and 8 waiting processes

|

Each message should be terminated with a newline character (ie. \n).

Likewise, the server only needs to process one command per client and

only needs to handle one client at a time. The client should echo the

response it receives from the server to stdout.

Scheduling

The order in which jobs should be executed is determined by one of the following scheduling policies:

-

FIFO: Execute jobs in the order in each they arrival in the process queue.

-

Round Robin: Execute jobs in a round robin fashion.

-

Multi-Level Feedback Queue: Execute jobs based on a dynamic priority structure.

You must implement all three scheduling disciplines along with any accounting and preemption mechanisms required to build these algorithms.

Pseudo-Solutions

This project relates directly to our discussion on schedulers. Refer to your textbook, Operating Systems: Three Easy Pieces and the slides on scheduling: Scheduling (FIFO, Round Robin) and Scheduling (MLFQ, Lottery).

Usage

The full set of pq command-line options are show below:

$ ./bin/pq -h

Usage: ./bin/pq [options]

General Options:

-h Print this help message

-f PATH Path to IPC channel

Client Options:

add COMMAND Add COMMAND to queue

status Query status of queue

running Query running jobs

waiting Query waiting jobs

flush Remove all jobs from queue

Server Options:

-n NCPUS Number of CPUs

-p POLICY Scheduling policy (fifo, rdrn, mlfq)

-t MICROSECONDS Time between scheduling

For instance, to start the server with 4 cores and with the Round Robin

scheduling policy, we would run:

$ ./bin/pq -n 4 -p rdrn

To add the command echo DOING_IT_FOR_THE_MONEY to the queue, we would use

the client as follows:

$ ./bin/pq add echo DOING_IT_FOR_THE_MONEY

Reference Implementation

A reference implementation of pq can be found on AFS:

/afs/nd.edu/user15/pbui/pub/bin/pq

Feel free to play around with this in order to explore how pq

should behave.

Note, you cannot create Unix domain sockets on an AFS mount. Therefor you will need to pass in a path to the socket:

$ /afs/nd.edu/user15/pbui/pub/bin/pq -f /tmp/pq.$USER

That will run the reference implemenation with a socket located at /tmp/pq.$USER.

Traces

Here are some example traces of pq in action:

FIFO

FIFO: Server

FIFO: Client

Round Robin

Round Robin: Server

Round Robin: Client

Multi-Level Feedback Queue

Multi-Level Feedback Queue: Server

Multi-Level Feedback Queue: Client

Deliverables

As noted above, you are to work in groups of one or two (three

is permitted, but discouraged) to implement pq. You must use (C99) (not

C++) as the implementation language for pq itself, while any test

scripts are to be written in any reasonable scripting language..

Timeline

Here is a timeline of events related to this project:

| Date | Event |

|---|---|

| Tuesday, September 11 | Project description and repository are available. |

| Friday, September 21 | Code is due (pushed to GitLab to master branch). |

| Friday, September 28 | Demonstrations of pq must be completed. |

Repository

To start this project, one group member must fork the Project 02 repository on GitLab:

https://gitlab.com/nd-cse-30341-fa18/cse-30341-fa18-project02

Once this repository has been forked, follow the instructions from Reading 00 to:

-

Make the repository private.

-

Configure access to the repository.

Make sure you add all the members of the team in addition to the instructional staff.

Source Code

As you can see, the base Project 02 repository contains a README.md file

and the following folder hierarchy:

project02

\_ Makefile # This is the project Makefile

\_ bin # This contains the executables

\_ include # This contains the header files

\_ lib # This contains the libraries

\_ src # This contains the C99 source code

\_ tests # This contains test scripts and source code

\_ workloads # This conatins workload benchmarking scripts

You must maintain this folder structure for your project and place files in their appropriate place.

K.I.S.S.

While the exact organization of the project code is up to you, keep in mind that you will be graded in part on coding style, cleaniness, and organization. This means your code should be consistently formatted, not contain any dead code, have reasonable comments, and appropriate naming among other things:

-

Break long functions into smaller functions.

-

Make sure each function does one thing and does it well.

-

Abstract, but don't over do it.

Please refer to these Coding Style slides for some tips and guidelines on coding style expectations.

Compiling

To help you get started, we have provided a Makefile that will build the

pq application for you:

$ make # Build pq executable

Compiling src/process.o

Compiling src/process_list.o

Compiling src/signal.o

Compiling src/socket.o

Compiling src/scheduler.o

Compiling src/scheduler_internal.o

Compiling src/scheduler_fifo.o

Compiling src/scheduler_rdrn.o

Compiling src/scheduler_mlfq.o

Compiling src/server.o

Compiling src/client.o

Linking lib/libpq.a

Linking bin/pq

Running

Once you have the pq executable, you can run either the client or server

by specifing the appropriate commands:

$ ./bin/pq -f /tmp/pq.$USER -p fifo # Run server with FIFO policy $ ./bin/pq -f /tmp/pq.$USER add sleep 10 # Run client add command

To help you benchmark your process queue, the bin/worksim.py is

provided to you. This script is a workload simulator and uou can use

it to create a job that simulates a job with a particular I/O workload and

duration. For example, to create a job that is 30% I/O and 70% compute

that runs for 10 seconds, you can run the following:

$ ./bin/worksim.py 0.3 10

If you wish to suppress the debugging messages from worksim.py you can

set the NDEBUG environmental variable to 1 as follows:

$ NDEBUG=1 ./bin/worksim.py 0.3 10

This script will be useful for testing and benchmarking the different scheduling policies.

Implementation

All of the C99 header files are in the include/pq folder while the

C99 source code is contained in the src directory. To help you get

started, parts of the project are already implemented:

[x] client.c # PQ client (implemented) [x] pq.c # PQ main executable (implemented) [ ] process.c # PQ process structure (not implemented) [ ] process_list.c # PQ process list structure (not implemented) [ ] scheduler.c # PQ scheduler structure (not implemented) [ ] scheduler_fifo.c # PQ FIFO scheduler policy (not implemented) [ ] scheduler_internal.c # PQ internal scheduler functions (not implemented) [ ] scheduler_mlfq.c # PQ MLFQ scheduler policy (not implemented) [ ] scheduler_rdrn.c # PQ Round Robin scheduler policy (not implemented) [ ] server.c # PQ server (not implemented) [x] signal.c # PQ signal handlers (implemented) [x] socket.c # PQ sockets (implemented)

Basically, the main pq executable, socket, signal, and client code are

provided to you, but the server, scheduler, and process management

functions are not. Each of the functions in the incomplete files above

have comments that describe what needs to be done.

You will need to examine these source files and complete the implementation

of the server and scheduler. To do so, you will first need to implement a

basic Process structure and a corresponding ProcessList structure:

-

process.c: This structure is used to keep track of a process in the system (reference atinclude/pq/process.h). Most of the functions are straight-forward exceptprocess_poll. The purpose of this function is to read information from/proc/<pid>/statand update some of theProcessstructure's internal accounting. See How do I get the total CPU usage of an application from /proc/pid/stat? for a description of how this might be done. -

process_list.c: This structure is used to track a list or queue orProcessstructures. To enable efficient operations to the front and back of the list, the structure maintains pointers to both theheadandtailof the queue. Additionally, it has asizeattribute that records the number of items in the queue at all times. -

scheduler.c: This contains the main functions required for the server and basically just dispatches to other internal functions. -

scheduler_internal.c: This contains internal scheduler functions, mostly corresponding to the IPC commands such asADD,STATUS,RUNNING,WAITING,LEVELS, andFLUSH. -

scheduler_{fifo,mlfq,rdrn}.c: These contain the implementations to the corresponding scheduling disciplines. -

server.c: This contains the server implementation, which is outlined in the source code.

Testing

As part of the initial starter code, there are a few testing resources.

First, there is tests/test_process.c and tests/test_process_list.c.

These compile to programs that allow you test the core parts of the

Process and ProcessList structures. It is recommended that you get

these parts working before proceeding to any other part of the projects.

Likewise, there is a tests/test_ipc.sh that will allow you to quickly

test the client/server communication of your pq implementation. It also

provides an example of how you might wish to script or automate the pq system.

Furthermore, we have include a set of scripts in the workloads directory

that can be used to benchmark and test your scheduling policies. These are

non-exhaustive and only serve as examples of the sort of things you can do

to probe and examine your pq implementation.

If necessary, you should not hesitate in create your own additional test programs or scripts to facilitate verification and debugging.

Demonstration

As part of your grade, you will need perform a series of benchmarks on your

implementation of pq and then present these results face-to-face

to a TA where you will demonstrate the correctness of your

implementation and to discuss the results of the project.

Benchmarks

Once you have completed your implementation of pq, you must perform a

series of benchmarks that answer the following questions:

-

What type of workloads are best for FIFO? Round Robin? Multi-Level Feedback Queue? That is, where does each shine and where does each falter?

-

How well does each scheduling policy perform when we have multiple cores and many processes (

> 100) in the queue? -

How well does each scheduling policy perform when jobs arrive in the queue at different time intervals?

To answer these questions, you will need to write some scripts, collect some data, and make some graphs. How you do this is up to you.

Workload Scripts

As noted above, the Project 02 repository includes a set of workload scripts that you may use to test and benchmark your implementation. That said, to get all the data you need to answer the questions above, you will need to either augment these scripts or create your own.

Presentation

As part of your demonstration, you must provide a Google Drive

presentation (between 5 - 10 slides) with the following content:

-

Testing: A short discussion of how the correctness of the implementation was verified.

-

Benchmarking: A short description of what you benchmarked and how you benchmarked, including graphs and tables that show the results of your benchmarking.

-

Analysis: Answers to the questions above in light of your results.

-

Summary: Describe what you learned.

Note, you must incorporate images, graphs, diagrams and other visual elements as part of your presentation where reasonable.

Be prepared to be asked about different aspects of your project, as the TA may ask you questions to probe your understanding of the material and your work.

To arrange your demonstration time, please complete the form below:

Documentation

As noted above, the Project 02 repository includes a README.md file

with the following sections:

-

Members: This should be a list of the project members (names and email).

-

Demonstration: This is where you should provide a Google Drive link to your demonstration slides.

-

Errata: This is a section where you can describe any deficiencies or known problems with your implementation.

You must complete this document report as part of your project.

Extra credit

Once you have completed your project, you many extend your implementation

of pq by performing either (or both) of the following modifications:

-

Protocol: In the current protocol for

pq, the server only handles one command per client request. Modify the server to handle multiple commands from a single client. This will require implementing a new protocol where the client can send a message to the server, receive a result, and then send another message without having to reconnect. -

Multiple Clients: In the current project description, the server only needs to be able to handle one client at a time. Modify the server so it can communicate with multiple clients at once.

Each of these modifications is worth 1 Point of extra credit each.

Grading

Your project will be graded on the following metrics:

| Metric | Points |

|---|---|

Source Code

|

18.0

|

Demonstration

|

5.0

|

Documentation

|

1.0

|

Memory Correctness, Error Handling, Compiler Warnings

In addition to meeting the functional requirements of the project (as described above), your program must not exhibit any memory leaks or invalid memory accesses as would be detected by Valgrind.

Additionally, because [system calls] can fail, your program must implement robust error handling and ensure your code checks the status of [system calls] when reasonable.

Moreover, you code must compile without any warnings (and -Wall must

be one of the CFLAGS).