This Is Not The Course Website You Are Looking For

This course website is from a previous semester. If you are currently in the class, please make sure you are viewing the latest course website instead of this old one.

Project: Thor and Spidey

The goal of this project is to allow you to practice using low-level system calls related to sockets and networking. To do this, you will create two new programs:

-

thor: This is a basic HTTP client that will hammer a remote HTTP server by making multiple requests. -

spidey: This is a basic HTTP server that supports directory listings, static files, and CGI scripts.

Once you have these programs, you will conduct experiments using thor

to test the latency and throughput of spidey. You will then need to

make a presentation about your project and demonstrate your code and

discuss your results directly to a TA or the instructor.

For this project, you are to work in groups of 1 or 2 students and record your source code and any responses to a new project GitLab repository. You must push your work to your GitLab repository by midnight, Wednesday, April 29, 2020.

Activity 0: GitLab Repository

Because you will be working in groups, you will need to fork and clone a new project repository:

https://gitlab.com/nd-cse-20289-sp20/cse-20289-sp20-project

To do this, you should follow the same instructions from Reading 00 (except adjust for the different repository location). Besure to do the following:

-

Make your project repository is private.

-

Give the instructional staff and your group members developer access to your project repository.

-

Record your group members in the Members section of the

README.md.

Note: You should only have one repository per group.

Note: MAKE SURE YOUR REPOSITORY IS PRIVATE.

Cloning Problems

If you get errors while cloning along the lines of "fatal: unable to write

sha1 file", it is because the NFS server is still having issues. There

are two straightforward workarounds:

-

Try another student machine.

-

Clone to

/tmpand thenmvthe repository to yourHOMEdirectory.

Either way, please send a message to csehelp@nd.edu and let them know about the problem you experienced (which machine and what command you were trying).

Once forked, you should [clone] your repository to a local machine. Inside

the project folder, you should have the following files:

project

\_ Makefile # This is the Makefile for building all the project artifacts

\_ README.md # This is the README file for recording your responses

\_ bin # This conatins the scripts and binary executables

\_ test_spidey.sh # This is the Spidey test script

\_ test_thor.sh # This is the Thor test script

\_ thor.py # This is the Python script for the HTTP client

\_ include # This contains the C99 header files

\_ spidey.h # This is the C99 header file for the project

\_ src # This contains the C99 source files

\_ forking.c # This is the C99 implementation file for the forking mode

\_ handler.c # This is the C99 implementation file for the handler functions

\_ request.c # This is the C99 implementation file for the request functions

\_ single.c # This is the C99 implementation file for the single mode

\_ socket.c # This is the C99 implementation file for the socket functions

\_ spidey.c # This is the C99 implementation file for the main execution

\_ utils.c # This is the C99 implementation file for various utility functions

\_ www # This is the default web root directory

\_ song.txt # This is an example text document

\_ html # This is a folder for HTML documents

\_ index.html # This is an example HTML document

\_ images # This is a folder for image files

\_ a.png # This is an example image file

\_ b.jpg # This is an example image file

\_ c.jpg # This is an example image file

\_ d.png # This is an example image file

\_ scripts # This is a folder for CGI scripts

\_ cowsay.sh # This is an example CGI script (Shell)

\_ env.sh # This is an example CGI script (Shell)

\_ hello.py # This is an example CGI script (Python)

\_ text # This is a folder for text documents

\_ hackers.txt # This is an example text document

\_ lyrics.txt # This is an example text document

\_ pass # This is a sub-folder for text documents

\_ fail # This is an example text document

The details on what you need to implement are described in the following sections.

Frequently Asked Questions

Activity 1: Thor

The first program is bin/thor.py, which is a basic HTTP client similar to

curl or wget that supports the following features:

-

Supports utilizing multiple processes using the concurrent.futures package.

-

Performs multiple requests per process.

-

Computes the elapsed times for each HTTP request.

Overview

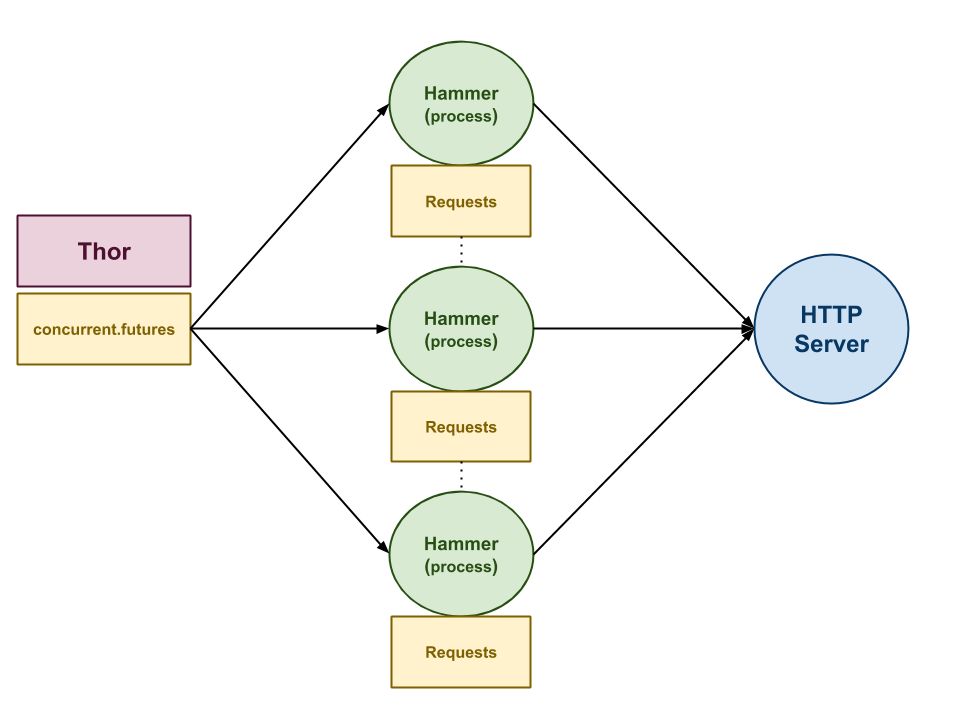

As shown above, bin/thor.py uses the concurrent.futures module to start

multiple processes (ie. HAMMERS). Each process than makes multiple

HTTP requests (ie. THROWS) using the requests module. Each HTTP

request is timed using the time module and the elapsed time is displayed.

Likewise, the average elapsed time for each process is also displayed, along

with the average elapsed time for all the requests across all processes.

Usage

Given a URL, bin/thor.py uses the HTTP protocol to fetch the contents

of the URL. The -t flag sets the number of throws (ie. HTTP requests)

to be made per process (default is 1), while -h sets the number of

hammers (ie. processes) to execute in parallel (default is 1). The -v

flag forces the program to dump the contents of the URL to standard

output.

# Display help message $ ./bin/thor.py Usage: thor.py [-h HAMMERS -t THROWS] URL -h HAMMERS Number of hammers to utilize (1) -t THROWS Number of throws per hammer (1) -v Display verbose output

Examples

Below are some examples of bin/thor.py in action:

Single Throw

# Perform single throw $ ./bin/thor.py http://example.com Hammer: 0, Throw: 0, Elapsed Time: 0.03 Hammer: 0, AVERAGE , Elapsed Time: 0.03 TOTAL AVERAGE ELAPSED TIME: 0.03

Multiple Throws

# Perform 10 throws $ ./bin/thor.py -t 10 http://example.com Hammer: 0, Throw: 0, Elapsed Time: 0.03 Hammer: 0, Throw: 1, Elapsed Time: 0.03 Hammer: 0, Throw: 2, Elapsed Time: 0.03 Hammer: 0, Throw: 3, Elapsed Time: 0.03 Hammer: 0, Throw: 4, Elapsed Time: 0.03 Hammer: 0, Throw: 5, Elapsed Time: 0.03 Hammer: 0, Throw: 6, Elapsed Time: 0.03 Hammer: 0, Throw: 7, Elapsed Time: 0.03 Hammer: 0, Throw: 8, Elapsed Time: 0.03 Hammer: 0, Throw: 9, Elapsed Time: 0.03 Hammer: 0, AVERAGE , Elapsed Time: 0.03 TOTAL AVERAGE ELAPSED TIME: 0.03

Multiple Throws with Multiple Hammers

# Perform 5 throws requests with 2 hammers $ ./bin/thor.py -t 5 -h 2 http://example.com Hammer: 1, Throw: 0, Elapsed Time: 0.03 Hammer: 0, Throw: 0, Elapsed Time: 0.03 Hammer: 1, Throw: 1, Elapsed Time: 0.03 Hammer: 0, Throw: 1, Elapsed Time: 0.03 Hammer: 1, Throw: 2, Elapsed Time: 0.03 Hammer: 0, Throw: 2, Elapsed Time: 0.03 Hammer: 1, Throw: 3, Elapsed Time: 0.03 Hammer: 0, Throw: 3, Elapsed Time: 0.03 Hammer: 1, Throw: 4, Elapsed Time: 0.03 Hammer: 1, AVERAGE , Elapsed Time: 0.03 Hammer: 0, Throw: 4, Elapsed Time: 0.03 Hammer: 0, AVERAGE , Elapsed Time: 0.03 TOTAL AVERAGE ELAPSED TIME: 0.03

Single Throw with Verbose Output

# Perform single throw with verbose output $ ./bin/thor.py -v http://example.com <!doctype html> <html> <head> <title>Example Domain</title> <meta charset="utf-8" /> <meta http-equiv="Content-type" content="text/html; charset=utf-8" /> <meta name="viewport" content="width=device-width, initial-scale=1" /> <style type="text/css"> body { background-color: #f0f0f2; margin: 0; padding: 0; font-family: -apple-system, system-ui, BlinkMacSystemFont, "Segoe UI", "Open Sans", "Helvetica Neue", Helvetica, Arial, sans-serif; } div { width: 600px; margin: 5em auto; padding: 2em; background-color: #fdfdff; border-radius: 0.5em; box-shadow: 2px 3px 7px 2px rgba(0,0,0,0.02); } a:link, a:visited { color: #38488f; text-decoration: none; } @media (max-width: 700px) { div { margin: 0 auto; width: auto; } } </style> </head> <body> <div> <h1>Example Domain</h1> <p>This domain is for use in illustrative examples in documents. You may use this domain in literature without prior coordination or asking for permission.</p> <p><a href="https://www.iana.org/domains/example">More information...</a></p> </div> </body> </html> Hammer: 0, Throw: 0, Elapsed Time: 0.03 Hammer: 0, AVERAGE , Elapsed Time: 0.03 TOTAL AVERAGE ELAPSED TIME: 0.03

Hints

-

Parse the command line options to set the

hammers,throws, andverbosevariables. -

Use concurrent.futures.ProcessPoolExecutor to create a pool of processes.

-

Use the

mapmethod of the ProcessPoolExecutor to have each process execute thedo_hammerfunction on a sequence of arguments. -

The

hammermethod should perform multiple HTTPgetrequests by performing requests.get on theURL. Each request should be timed using time.time and the average elapsed request time should be returned by this function.

Activity 2: Spidey

The second program is bin/spidey, which is a basic HTTP server similar to

Apache or NGINX that supports the following features:

-

Executing in either single connection mode or forking mode

-

Displaying directory listings

-

Serving static files

-

Running CGI scripts

-

Showing error messages

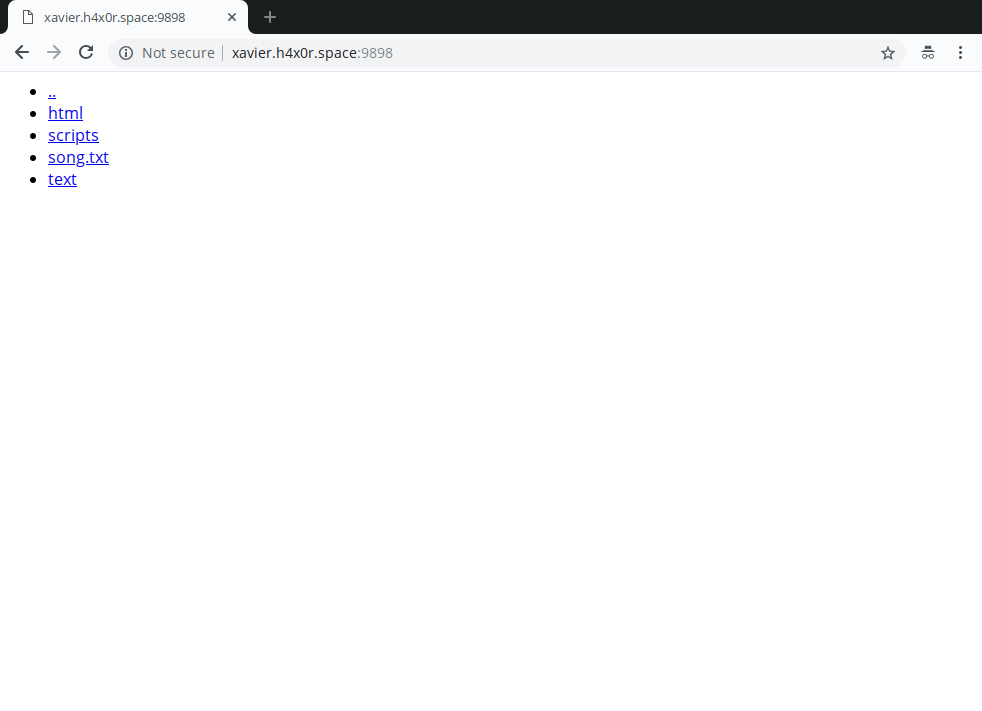

An example of spidey in action can be found at:

weasel.h4x0r.space:9898.

Overview

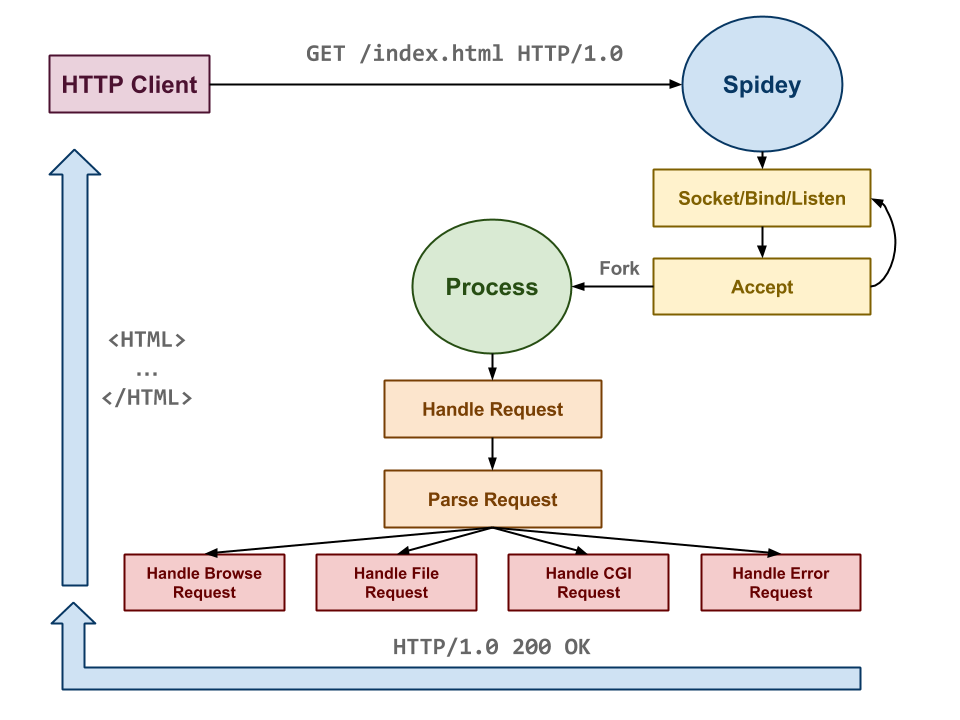

Overall, the implementation of a HTTP server is straightforward:

-

First, we allocate a server socket, bind it to a port, and then listen for incoming connections.

-

Next, we accept an incoming client connection and parse the input data stream into a HTTP

requeststructure. -

Based on the request's parameters, we then form a response and send it back to the client.

-

Continue to perform steps

2and3for as long as the server is running. If we are in forking mode, then we simply fork after we accept a connection and let the child process handle parsing and responding to the request. Otherwise, we simply handle one client at a time in single connection mode.

Usage

When executed, bin/spidey opens a socket on the PORT specified by the

-p flag (default is 9898) and handles HTTP requests for files in the

path directory specified by the -r flag (default is the www folder in

the current directory). If -c forking is specified, then bin/spidey will

fork a child process for each incoming client request. The user may also

set the default mimetype for files via the -M flag and set the path to

the mime.types file via the -m flag (default is /etc/mime.types).

# Display help message $ ./bin/spidey -h Usage: ./spidey [hcmMpr] Options: -h Display help message -c mode Single or Forking mode -m path Path to mimetypes file -M mimetype Default mimetype -p port Port to listen on -r path Root directory

Examples

Below are some examples of spidey in action:

Single Connection Mode (with defaults)

$ ./bin/spidey [ 3734] LOG spidey.c:86 Listening on port 9898 [ 3734] DEBUG spidey.c:87 RootPath = /home/pbui/src/teaching/cse.20289.sp20/project.pbui/www [ 3734] DEBUG spidey.c:88 MimeTypesPath = /etc/mime.types [ 3734] DEBUG spidey.c:89 DefaultMimeType = text/plain [ 3734] DEBUG spidey.c:90 ConcurrencyMode = Single [ 3734] LOG request.c:61 Accepted request from 10.63.12.82:45320 [ 3734] DEBUG request.c:181 HTTP METHOD: GET [ 3734] DEBUG request.c:182 HTTP URI: / [ 3734] DEBUG request.c:183 HTTP QUERY: [ 3734] DEBUG request.c:255 HTTP HEADER Host = xavier.h4x0r.space:9898 [ 3734] DEBUG request.c:255 HTTP HEADER User-Agent = Mozilla/5.0 (X11; Linux x86_64; rv:45.0) Gecko/20100101 Firefox/45.0 [ 3734] DEBUG request.c:255 HTTP HEADER Accept = text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8 [ 3734] DEBUG request.c:255 HTTP HEADER Accept-Language = en-US,en;q=0.5 [ 3734] DEBUG request.c:255 HTTP HEADER Accept-Encoding = gzip, deflate [ 3734] DEBUG request.c:255 HTTP HEADER Connection = keep-alive [ 3734] DEBUG handler.c:39 HTTP REQUEST PATH: /home/pbui/src/teaching/cse.20289.sp20/project.pbui/www [ 3734] DEBUG handler.c:47 HTTP REQUEST TYPE: BROWSE [ 3734] LOG handler.c:64 HTTP REQUEST STATUS: 200 OK

Forking Connection Mode (with defaults)

$ ./bin/spidey -c forking [ 3764] LOG spidey.c:86 Listening on port 9898 [ 3764] DEBUG spidey.c:87 RootPath = /home/pbui/src/teaching/cse.20289.sp20/project.pbui/www [ 3764] DEBUG spidey.c:88 MimeTypesPath = /etc/mime.types [ 3764] DEBUG spidey.c:89 DefaultMimeType = text/plain [ 3764] DEBUG spidey.c:90 ConcurrencyMode = Forking [ 3764] LOG request.c:61 Accepted request from 10.63.12.82:45324 [ 3765] DEBUG forking.c:42 Child process: 3765 [ 3765] DEBUG request.c:181 HTTP METHOD: GET [ 3765] DEBUG request.c:182 HTTP URI: / [ 3765] DEBUG request.c:183 HTTP QUERY: [ 3765] DEBUG request.c:255 HTTP HEADER Host = xavier.h4x0r.space:9898 [ 3765] DEBUG request.c:255 HTTP HEADER User-Agent = Mozilla/5.0 (X11; Linux x86_64; rv:45.0) Gecko/20100101 Firefox/45.0 [ 3765] DEBUG request.c:255 HTTP HEADER Accept = text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8 [ 3765] DEBUG request.c:255 HTTP HEADER Accept-Language = en-US,en;q=0.5 [ 3765] DEBUG request.c:255 HTTP HEADER Accept-Encoding = gzip, deflate [ 3765] DEBUG request.c:255 HTTP HEADER Connection = keep-alive [ 3765] DEBUG request.c:255 HTTP HEADER Cache-Control = max-age=0 [ 3765] DEBUG handler.c:39 HTTP REQUEST PATH: /home/pbui/src/teaching/cse.20289.sp20/project.pbui/www [ 3765] DEBUG handler.c:47 HTTP REQUEST TYPE: BROWSE [ 3765] LOG handler.c:64 HTTP REQUEST STATUS: 200 OK

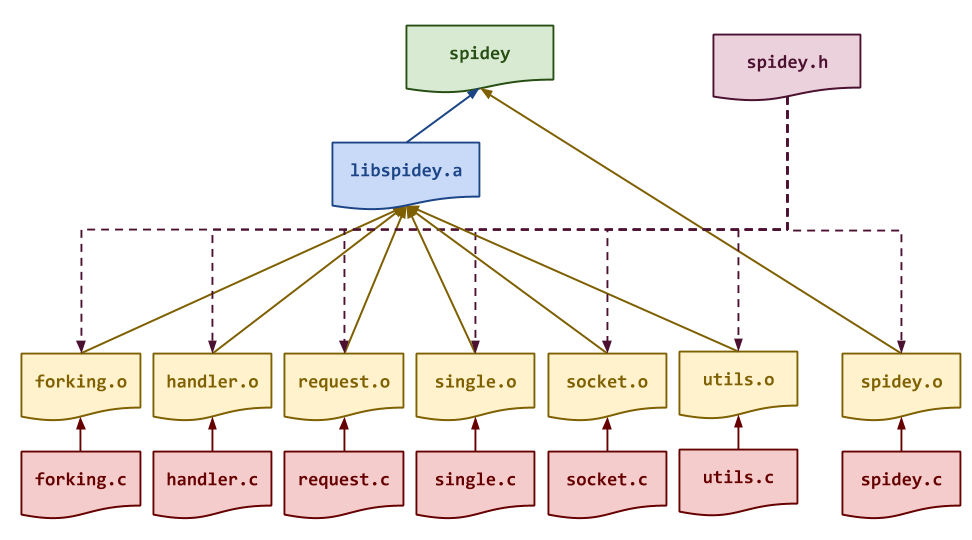

Building

As usual, the Makefile contains all the rules or recipes for building the

project artifacts (e.g. bin/spidey, etc.). Although the provided

Makefile contains most of the variable definitions and clean recipe, you

must add the appropriate rules for bin/spidey and any intermediate objects.

The dependencies for these targets are shown in the DAG below:

Makefile Variables

You must use the CC, CFLAGS, LD, LDFLAGS, AR and ARFLAGS

variables when appropriate in your rules.

Once you have a working Makefile, you should be able to run the following

commands:

# Build spidey $ make Compiling src/spidey.o... Compiling src/forking.o... Compiling src/handler.o... Compiling src/request.o... Compiling src/single.o... Compiling src/socket.o... Compiling src/utils.o... Linking lib/libspidey.a... Linking bin/spidey... # Simulate build with tracing output $ make -n echo Compiling src/spidey.o... gcc -g -Wall -Werror -std=gnu99 -Iinclude -c -o src/spidey.o src/spidey.c echo Compiling src/forking.o... gcc -g -Wall -Werror -std=gnu99 -Iinclude -c -o src/forking.o src/forking.c echo Compiling src/handler.o... gcc -g -Wall -Werror -std=gnu99 -Iinclude -c -o src/handler.o src/handler.c echo Compiling src/request.o... gcc -g -Wall -Werror -std=gnu99 -Iinclude -c -o src/request.o src/request.c echo Compiling src/single.o... gcc -g -Wall -Werror -std=gnu99 -Iinclude -c -o src/single.o src/single.c echo Compiling src/socket.o... gcc -g -Wall -Werror -std=gnu99 -Iinclude -c -o src/socket.o src/socket.c echo Compiling src/utils.o... gcc -g -Wall -Werror -std=gnu99 -Iinclude -c -o src/utils.o src/utils.c echo Linking lib/libspidey.a... ar rcs lib/libspidey.a src/forking.o src/handler.o src/request.o src/single.o src/socket.o src/utils.o echo Linking bin/spidey... gcc -L. -o bin/spidey src/spidey.o lib/libspidey.a

Depending on your compiler, you may see some warnings with the initial starter code.

Memory Issues

Your program must also be free of memory issues such as invalid memory

accesses and memory leaks. Use valgrind to verify the correctness of

your program:

$ valgrind --leak-check=full ./bin/spidey

Be sure to check using different command line arguments as well to ensure you verify all code paths.

Sockets

To implement part 1, you must implement the socket_listen function in

the socket.c source file. You may use echo_server_forking.c as

inspiration.

HTTP Requests

To implement part 2, you must implement the accept_request,

free_request, parse_request, parse_request_method, and

parse_request_headers functions in the request.c source file. These

functions are used to accept in incoming client connection and to parse the

request into a struct with the following fields:

typedef struct { int fd; /*< Client socket file descriptor */ FILE *stream; /*< Client socket file stream */ char *method; /*< HTTP method */ char *uri; /*< HTTP URI */ char *path; /*< Real path corresponding to URI and RootPath */ char *query; /*< HTTP query string */ char host[NI_MAXHOST]; /*< Host name of client */ char port[NI_MAXHOST]; /*< Port number of client */ Header *headers; /*< List of name, value Header pairs */ } Request;

For example, suppose a client connected to an instance of spidey from

127.0.0.1:54321 and requested the URI: /script.cgi?q=monkeys using the

following HTTP request:

GET /script.cgi?q=monkeys HTTP/1.0 Host: xavier.h4x0r.space:9898

The request struct would then contain the following information such as:

method = GET

uri = /script.cgi

path = /tmp/spidey/www/script.cgi

query = q=monkeys

host = 127.0.0.1

port = 54321

headers = [{Host: xavier.h4x0r.space:9898}]

HTTP Response

To implement part 3, you must implement handle_request,

handle_browse_request, handle_file_request, and handle_error. The first

function is used to analyze the request and then dispatch the appropriate

handler:

-

handle_browse_request: The requested URI is a directory, and so the server will list all the contents of the directory in lexicographical order.

-

handle_cgi_request: The requested URI is an executable file, and so the server will execute the file as a CGI script and send the output of the script to the client.

-

handle_file_request: The requested URI is a readable file, and so the server will simply open and read the specified file and send it to the client.

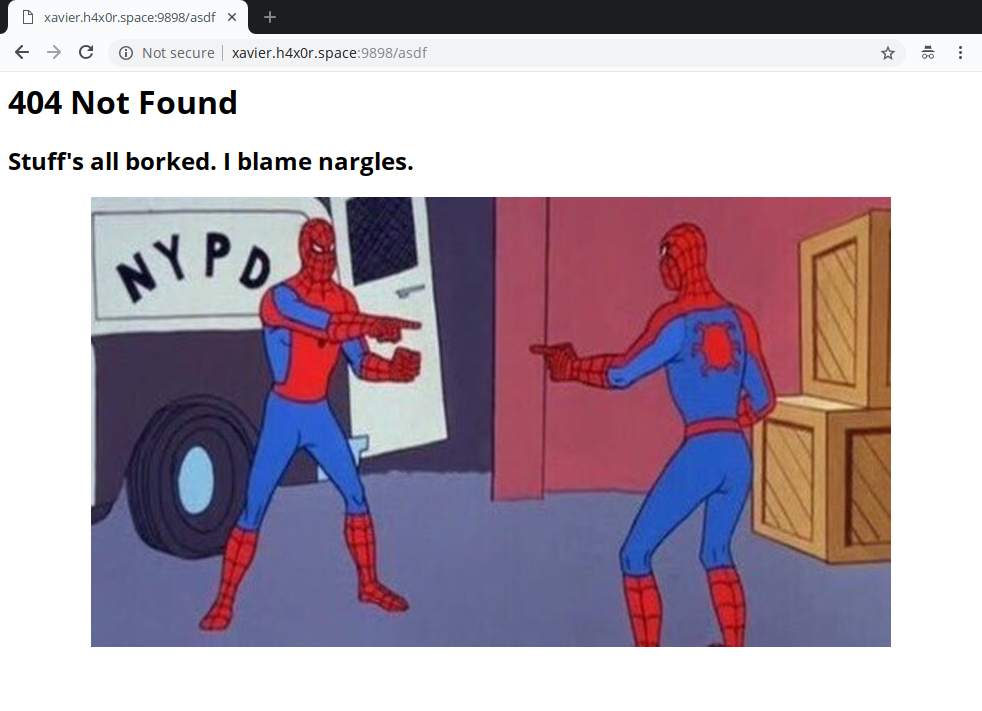

-

handle_error: There was an error in the request or in processing the request, so send the user an hilarious and vague error message. Although HTTP has quite a few HTTP status codes, we are only concerned with a handful:200,400,404, and500.

To implement these functions, you will also need to implement the

determine_mimetype, determine_request_path, and http_status_string

functions in utils.c. Details about these functions can be found in the

associated code skeletons.

In general, a valid HTTP response looks like this:

HTTP/1.0 200 OK Content-Type: text/html <html> ... </html>

The first line contains the HTTP status code for the request. The second

line instructs the client (i.e. web browser) what type of data to expect

(i.e. mimetype). Each of these lines should be terminated with \r\n.

Additionally, it is important that after the Content-Type line you include

a blank line consisting of only \r\n. Most web clients will expect this

blank line before parsing for the actual content.

CGI

To enable CGI scripts to receive input from the HTTP client, we must pass

the request parameters to the CGI script via environmental variables. A

list of such variables can be found

here and

here. For this

project, we are mainly concerned with: DOCUMENT_ROOT, QUERY_STRING,

REMOTE_ADDR, REMOTE_PORT, REQUEST_METHOD, REQUEST_URI, SCRIPT_FILENAME,

SERVER_PORT, HTTP_HOST, HTTP_ACCEPT, HTTP_ACCEPT_LANGUAGE,

HTTP_ACCEPT_ENCODING, HTTP_CONNECTION, and HTTP_USER_AGENT.

Our strategy for implementing CGI is to simply export the variables to the environment and then call popen on the script.

Two example CGI scripts are provided for you to test in the starter code directory.

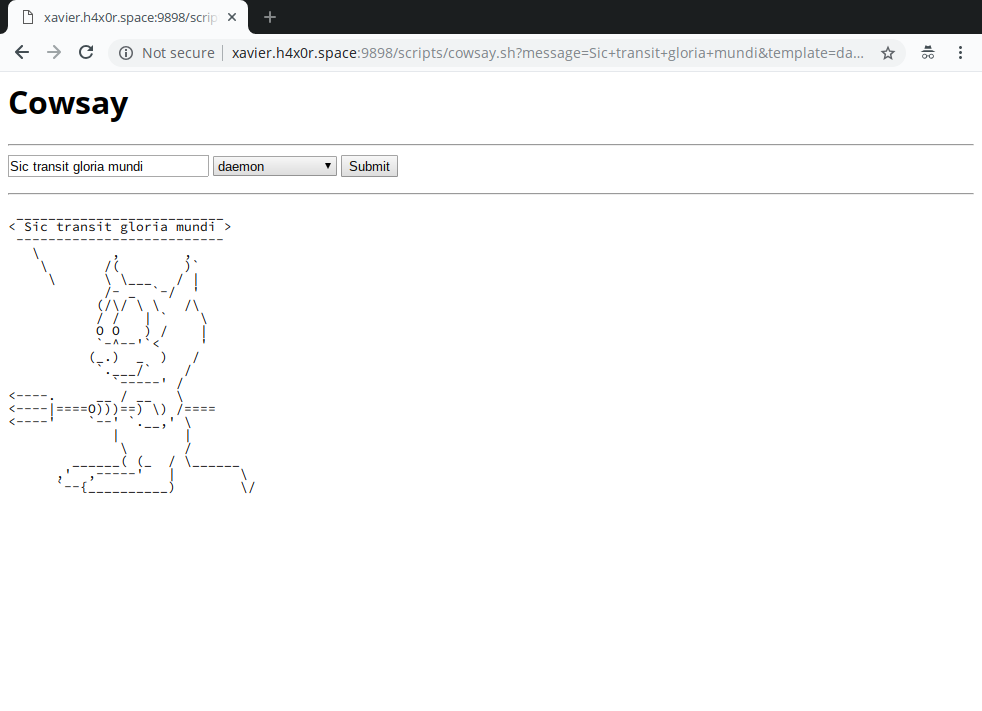

Cowsay

If your cowsay.sh CGI script doesn't work, it could be because cowsay

is not your PATH. To fix this, add the following to the top of

cowsay.sh:

export PATH=/escnfs/home/pbui/pub/pkgsrc/bin:$PATH

Concurrency Modes

As noted above, spidey supports a single connection mode (the default), and

a forking mode. In single mode, one connection is handled at a time and thus

there is no concurrency. In forking mode, a child process is forked after

accepting a request and used to handle the request, thus allowing for

multiple requests to be processed at the same time.

Because of the architecture of the spidey project, we only need to

implement a high-level dispatch function for each of the modes and do not

need to re-write any of the handlers or request functions.

Logging

To help you debug and monitor your webserver, you should use the log and

debug macros liberally to record what is happening in your server. For

instance, consider the following types of events:

- Establishing a connection

- Detecting a disconnection

- Determining a request type

- Any failures (opening a file, allocating a resource, etc.)

Some of starter code already contains log and debug calls and you should

follow that example. Basically if knowing this information is useful in

debugging or monitoring the system, then you should log it!

Plan of Attack

-

Study the code base first before writing any code.

-

Work on the code incrementally and slowly complete each feature one-at-a-time.

Hints: socket.c

socket_listenshould resemble what we did in echo_server_forking.c and utilize socket, bind, and listen.

Hints: request.c

accept_request

-

You can use getnameinfo to lookup client information.

-

You can use fdopen to open a socket stream.

-

Remember that the headers in the

Requeststruct are connected in a singly-linked list.

parse_request_method

-

You can use

skip_whitespacein conjunction with strtok to parse the method and URI. -

You can use strchr to help you parse the query from the URI.

-

You should use strdup to store strings in the

requeststruct.

parse_request_headers

Hints: handler.c

handle_request

-

This is just a dispatch function that uses

parse_request,determine_request_path, and stat and accessto determine which handler to call. -

Use

handle_errorwith an appropriate HTTP status code in case of an error situation.

handle_browse_request

handle_file_request

-

You should use the

determine_mimetypeto get the content type. -

Be sure to print the HTTP header before you print the contents of the file.

handle_cgi_request

-

You should use setenv to export the CGI environment variables.

-

You should use popen to execute the script.

-

You should use fgets and fputs to read the output of the script and send it to the socket stream.

handle_error

-

You should use

http_status_stringto translate thehttp_statusto a string. -

Be sure to print the HTTP header before you print the contents of the error message.

Hints: single.c

- You should use

accept_request,handle_requestandfree_request.

Hints: forking.c

Hints: spidey.c

Hints: utils.c

determine_mimetype

-

You should use strrchr to find the file extension.

-

You should use fopen, fgets, strtok,

skip_whitespace, andstreqto search for the matching file extention.

determine_request_path

-

The real path is a combination of the

RootPathand theuri. -

You should use realpath to determine the absolute path.

-

You should use strcmp to check the path.

http_status_string

- You should return strings of the appropriate HTTP status codes.

skip_nonwhitespace

- You simply need to advance the pointer and return that pointer.

skip_whitespace

- You simply need to advance the pointer and return that pointer.

Error Handling

Your program should check if system calls or functions fail and handle those situations accordingly.

Activity 3: Experiments

Once you have completed both thor.py and spidey, you are to conduct

expermients that answer the following questions:

-

What is the average latency of

spideyin single connection vs forking mode for: directory listings, static files, CGI scripts? -

What is the average throughput of

spideyin single connection vs forking mode for: small static files (1KB), medium static files (1MB), and large static files (1GB)?

For each question, you must determine how you want to explore the question

and how you wish to use thor.py to test spidey.

You should create shell scripts to automate running your experiments multiple times to generate a reasonable amount of data.

Static Files

DO NOT commit your test data, particularly the medium and large static files to your project [git] repository. If you do, everyone in your group will be angry (and so will the instructional staff).

Different Machines

To get reasonable times, be sure that you have the client and server running on different machines.

Guru Point: Web Programming (3 Points)

For extra credit, you are to extend your spidey HTTP server to do any of

the following:

-

Use Bootstrap to make directory listings and error pages more visually pleasing.

-

Display thumbnails for images in directory listings.

-

Write a guest book CGI script that allows users to add entries to a running message board.

-

Write a survey or personality test CGI script.

-

Write a multiple-choice quiz CGI script of the topic of your choice.

To receive credit (up to three different options), you must show a TA or the instructor your code and a demonstration of it in action (include it in your project video).

Guru Point: Virtual Private Server (1 Point)

For extra extra credit, you are to sign up for virtual private server on a

service such as Digital Ocean, Linode, Amazon Web Services, Microsoft

Azure, Google Cloud, or Vultr and run your spidey HTTP server from

that server.

To receive credit, you must have the spidey run in the background as a

service on your virtual private server and then show a TA or the instructor

your spidey running in the cloud (include it in your project video).

Grading

Your project will be graded on the following metrics:

| Metric | Points |

|---|---|

Source Code

|

21.00

|

Demonstration

|

5.00

|

Commit History

To encourage you to work on the project regularly (rather than leaving it until the last second) and to practice performing incremental development, part of your grade will be based on whether or not you have regular and reasonably sized commits in your [git] log.

That is, you will lose a point if you commit everything at once near the project deadline.

Demonstration

As part of your project, you will need to create a short video (ie. 4 -

6 minutes in length) that accomplishes the following:

-

Summarizes what was accomplished in the project (ie. what worked and what didn't work) and how the work was divided among the group members.

-

Demonstrates the correctness of both

thor.pyandspideyby using the scripts described below. -

Describes how your group went about measuring the average latency of the different types of requests. You must have a table or diagram produced by your experiments that you analyze and explain.

-

Describes how your group went about measuring the average throughput of the different file sizes. You must have a table or diagram produced by your experiments that you analyze and explain.

-

Discuss the results of your experiments and explain why you received the results you did. What are the advantages and disadvantages to the forking model?

-

Wraps up the video by describing what you learned from not only the experiments but also the course as a whole.

Note, you must incorporate images, graphs, diagrams and other visual elements as part of your presentation where reasonable.

Please upload this video to Google Drive or YouTube and place a link in

your README.md.

Scripts

To demonstrate your project, you will need to download and execute the following scripts:

# Download latest thor test script $ curl -sL https://gitlab.com/nd-cse-20289-sp20/cse-20289-sp20-project/raw/master/bin/test_thor.sh > bin/test_thor.sh $ chmod +x bin/test_thor.sh # Execute thor test script $ ./bin/test_thor.sh Testing bin/thor.py... Functions ... Success Usage no arguments ... Success bad arguments ... Success Single Hammer https://example.com ... Success https://example.com (-v) ... Success https://yld.me ... Success https://yld.me (-v) ... Success https://yld.me/izE?raw=1 ... Success https://yld.me/izE?raw=1 (-v) ... Success Single Hammer, Multiple Throws https://example.com (-t 4) ... Success https://example.com (-t 4 -v) ... Success https://yld.me (-t 4) ... Success https://yld.me (-t 4 -v) ... Success https://yld.me/izE?raw=1 (-t 4) ... Success https://yld.me/izE?raw=1 (-t 4 -v) ... Success Multiple Hammers https://example.com (-h 2) ... Success https://example.com (-h 2 -v) ... Success https://yld.me (-h 2) ... Success https://yld.me (-h 2 -v) ... Success https://yld.me/izE?raw=1 (-h 2) ... Success https://yld.me/izE?raw=1 (-p 2 -v) ... Success Multiple Hammers, Multiple Throws https://example.com (-h 2 -t 4) ... Success https://example.com (-h 2 -t 4 -v) ... Success https://yld.me (-h 2 -t 4) ... Success https://yld.me (-h 2 -t 4 -v) ... Success https://yld.me/izE?raw=1 (-h 2 -t 4) ... Success https://yld.me/izE?raw=1 (-h 2 -t 4 -v) ... Success

Demonstrate: Thor

You will need execute the test_thor.sh script to demonstate that your

HTTP client works correctly.

Inconsistent Failures

Because the test_thor.sh script hits real servers, it is quite possible

that some requests might fail, which will then throw off the test script.

For this project, you are not required to handle these failures in any

particular way, so just be aware that if the test_thor.sh script

inconsistently reports failures (particularly the multiprocessing cases),

then it might have to do with the response from the servers and not your own

script.

As long as you can demonstrate at least one successful run of the

test_thor.sh script, you will be given full credit.

# Download latest spidey test script $ curl -sL https://gitlab.com/nd-cse-20289-sp20/cse-20289-sp20-project/raw/master/bin/test_spidey.sh > bin/test_spidey.sh $ chmod +x bin/test_spidey.sh # Execute spidey test script $ ./bin/test_spidey.sh _________________________________________________________________________________ / On another machine, please run: \ | | | valgrind --leak-check=full ./bin/spidey -r ~pbui/pub/www -p PORT -c MODE | | | | - Where PORT is a number between 9000 - 9999 | | | \ - Where MODE is either single or forking / --------------------------------------------------------------------------------- \ ^__^ \ (oo)\_______ (__)\ )\/\ ||----w | || || Server Host: weasel.h4x0r.space Server Port: 9898 Testing spidey server on weasel.h4x0r.space:9898 ... Handle Browse Requests ... / ... Success /html ... Success /images ... Success /scripts ... Success /text ... Success /text/pass ... Success Handle File Requests ... /html/index.html ... Success /images/a.png ... Success /images/b.jpg ... Success /images/c.jpg ... Success /images/d.png ... Success /text/hackers.txt ... Success /text/lyrics.txt ... Success /text/pass/fail ... Success /song.txt ... Success Handle CGI Requests ... /scripts/env.sh ... Success /scripts/cowsay.sh ... Success /scripts/cowsay.sh?message=hi ... Success /scripts/cowsay.sh?message=hi&template=vader ... Success /scripts/hello.py ... Success /scripts/hello.py?user=pparker ... Success Handle Errors ... /asdf ... Success Bad Request ... Success Bad Headers ... Success

Demonstrate: Spidey

You will need execute the test_spidey.sh script in both single and forking

mode to demonstate that your HTTP server works correctly.

Testing Spidey

Note, with the test_spidey.sh script above, you want to make sure you enter

the host where your spidey is running (not weasel.h4x0r.space).

If you wish to test individual URLs, remember that you can always use curl:

$ curl -v http://HOST:PORT

Where HOST and PORT are the hostname and port number of your spidey

server.

Documentation

As noted above, the project repository includes a README.md file with the

following sections:

-

Members: This should be a list of the project members.

-

Demonstration: This is where you should provide a link to your demonstration video.

-

Errata: This is a section where you can describe any deficiencies or known problems with your implementation.

-

Contributions: This is where you should enumerate the individual contributions of each group member.

Note: You must complete this document report as part of your project.

Deliverables

To submit your assignment, please commit your work your project

repository on GitLab. Your project folder should only contain at the

following files:

wwwand all the files in this folderMakefileREADME.mdbin/thor.pyinclude/spidey.hsrc/forking.csrc/handler.csrc/request.csrc/single.csrc/socket.csrc/spidey.csrc/utils.c

Note: You must submit your project deliverables (source code and video demonstration) before midnight, Wednesday, April 29, 2020.