Biometrics: The Eye of the Beholder

Simply put, biometrics is the use of physical or biological characteristics to identify people, with or without their knowledge. Fingerprinting is one of the oldest and most reliable forms of biometrics. Although research in this field has been ongoing for several years, the events of September 11 stimulated a biometrics boom. Concerns about the safety of people in public places, specifically airports, promise new life to many security consulting firms and manufacturers of personal identification systems. With increased federal and state interest has come increased funding for a variety of human-recognition research projects, from radar imaging of body cavities to recognizing an individual’s iris from 50 yards away.

Supported by grants from the Defense Department’s Defense Advanced Research Projects Agency, Kevin W. Bowyer, Schubmehl-Prein Chair of Computer Science and Engineering, and Associate Professor Patrick J. Flynn have begun assembling a database of faces in the Computer Vision Research Laboratory in order to develop new theories and image-based identification systems. “It’s unfortunate, but true,” says Flynn, “that since September 11, if you have a product that can be used to identify people or threats, it is very easy to get noticed in a security context. Some of those companies may be selling physical presence and not necessarily valid systems.” Flynn does not suggest that all such systems are faulty, only that they have not yet been thoroughly tested.

“There is also a pressing need for databases of faces, head shapes, and infrared images of people to serve in the assessment of such systems and characterization of their performance,” says Flynn. To that end, he and Bowyer have been recording infrared and visible-light pictures of approximately 70 students each week. They have been doing so for the past two semesters and will continue taking photos for another year.
According to Bowyer, the idea is to capture and define an individual’s face mathematically, using digital and infrared cameras to turn the photos into a string of numbers. The numbers could then be compared against other “strings” in the inventory, and the information used by researchers to develop more reliable face-recognition systems.

The drawback of face-recognition technology has been that a person’s face can change from day to day. In fact, the difference between images of the same individual taken on two different days may be more pronounced than the difference between two different people. So what’s the answer? Bowyer and Flynn believe that an effective system will eventually rely on a combination of technologies, including infrared systems. “We may never be able to identify a specific individual, but there is every reason to believe that, using several types of images together, we will be able to identify whether a person is nervous, wearing a disguise, or otherwise suspicious.”
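The article does not say how Bowyer and Flynn encode or compare faces. As a hedged illustration of what comparing “strings of numbers” can mean, the sketch below treats each face as a fixed-length feature vector and finds the closest enrolled vector by Euclidean distance, rejecting any match beyond a threshold. The vectors, the names `best_match` and `GALLERY`, and the threshold value are all hypothetical. Real systems derive far longer vectors from the images themselves.

```python
import math
from typing import Dict, List, Optional, Tuple

FeatureVector = List[float]


def euclidean(a: FeatureVector, b: FeatureVector) -> float:
    """Straight-line distance between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def best_match(probe: FeatureVector,
               gallery: Dict[str, FeatureVector],
               threshold: float) -> Tuple[Optional[str], float]:
    """Return the closest enrolled identity, or None if nothing is within threshold."""
    best_id: Optional[str] = None
    best_dist = math.inf
    for subject_id, template in gallery.items():
        dist = euclidean(probe, template)
        if dist < best_dist:
            best_id, best_dist = subject_id, dist
    if best_dist > threshold:
        return None, best_dist  # closest face is still too different: reject
    return best_id, best_dist


# Made-up four-number "strings" standing in for enrolled faces (not real data).
GALLERY: Dict[str, FeatureVector] = {
    "subject_a": [0.12, 0.80, 0.33, 0.51],
    "subject_b": [0.90, 0.10, 0.45, 0.20],
}

if __name__ == "__main__":
    probe = [0.10, 0.78, 0.35, 0.50]  # a new photo of someone, already encoded
    print(best_match(probe, GALLERY, threshold=0.2))
```

The threshold is where day-to-day variation causes trouble. If two photos of the same person can lie farther apart than photos of two different people, no single threshold separates them cleanly.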

Bioinformatics

“The interface of computer technologies and biology is going to have a huge impact on society,” says Danny Chen, Rooney Associate Professor of Computer Science and Engineering. “The opportunities for medical researchers and computer engineers and scientists to work together to solve real-life problems are tremendous. For example, I have been studying robotics, more specifically mapping a path for a robot to follow, one based on the parameters of a specific environment. The same methods that allow me to determine a path for the kinds of motions a robot needs to make to maneuver in its environment, without causing harm or being damaged itself, are applicable in a variety of medical applications.”

Today, when a surgeon begins planning for a specific operation, he or she might review an X-ray or other images of the body. Individually, those images often prove inconclusive. “What we’re working on,” says Chen, “is a human body tissue map, a topographical image that shows tissues, organs, tumors, and their positions relative to one another.” A body tissue map allows a surgeon to evaluate all options -- the costs, consequences, and benefits of a particular path he or she may follow in an actual surgical procedure.

This is especially helpful in radiation therapy. When physicians identify a tumor site and want to bombard it with radiation, one of their most important tasks is to avoid damaging organs and critical tissues. Chen’s path-mapping techniques, which identify safe routes for a number of individual radiation beams to enter the body from different directions, have shown promise as a way to minimize the energy and amount of radiation delivered to normal tissues while concentrating the combined power of all the beams on the tumor. “Our studies to date have been very encouraging, but we are still evaluating this approach. If we find strong evidence that our method outperforms the current methods, then we will begin more strenuous clinical experiments in conjunction with our collaborator, Cedric Yu, the director of Medical Physics at the University of Maryland Medical Center in Baltimore.”

Computational biology, biocomplexity, artificial life, agent-based modeling: these are all terms for computer simulations of complex phenomena, from molecules to societies to entire ecosystems. “Biocomplexity,” says Gregory R. Madey, associate professor of computer science and engineering, “studies large numbers of diverse things and how they interact. Rather than developing a lot of equations and trying to predict what’s going to happen -- which is difficult mathematically when the items to be studied are all different -- we build a model of the system and let it behave as it would in real life.”

One of Madey’s current projects is a collaboration with Patricia A. Maurice, associate professor of civil engineering and geological sciences. They are modeling natural organic matter, which plays a vital role in ecological and biogeochemical processes. Two graduate students and two undergraduates work with Madey and Maurice on the project, which examines ecosystem function, the global carbon cycle, and the quantitative aspects of organic carbon transfer in the environment.

Jesus A. Izaguirre, assistant professor of computer science and engineering, is also part of the University’s biocomplexity initiative, which is directed by the Interdisciplinary Center for the Study of Biocomplexity. Working with James A. Glazier, associate professor of physics, and Mark S. Alber, professor of mathematics, Izaguirre is modeling the development of avian limbs. “We’re not trying to engineer a better chicken wing, although that might have interesting commercial applications,” says Izaguirre. “The purpose of the project is to determine the physical properties of cells and the tissue of a limb bud as it grows so we can develop a computer model of the process.” Izaguirre believes that the medical and scientific implications of the project are very exciting and may lead to a better understanding of malformations and other diseases associated with development.

Izaguirre also models large biological molecules such as proteins (estrogen receptors, for example) and DNA. Simulating the behavior of these molecules, and how they interact as part of a drug, a disease process, or metabolic function, can help pharmaceutical engineers design new and more effective drugs. For example, he is working with Martin P. Tenniswood, Coleman Foundation Professor of Biological Sciences, to model the effectiveness of drugs against breast cancer. “If you have a molecular model,” he says, “you can understand the mechanisms and determine the effectiveness of a specific drug.”
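The article does not describe the software Izaguirre uses. As a hedged, generic illustration of what “simulating the behavior of molecules” involves, the sketch below runs a tiny molecular dynamics simulation. Two atoms on a line interact through a Lennard-Jones potential, and the velocity Verlet integrator advances their positions and velocities in small time steps. The reduced units, starting positions, time step, and all function names are assumptions made for illustration. Simulations of proteins or DNA involve many thousands of atoms, richer force fields, and more specialized integrators.

```python
from typing import Tuple

# Hypothetical reduced units: epsilon, sigma, and mass all set to 1.
EPSILON = 1.0
SIGMA = 1.0
MASS = 1.0


def lj_energy(r: float) -> float:
    """Lennard-Jones pair potential V(r) = 4*eps*((sigma/r)^12 - (sigma/r)^6)."""
    sr6 = (SIGMA / r) ** 6
    return 4.0 * EPSILON * (sr6 * sr6 - sr6)


def lj_force(r: float) -> float:
    """F(r) = -dV/dr; positive values push the two atoms apart."""
    sr6 = (SIGMA / r) ** 6
    return 24.0 * EPSILON * (2.0 * sr6 * sr6 - sr6) / r


def total_energy(x1: float, x2: float, v1: float, v2: float) -> float:
    """Kinetic plus potential energy of the two-atom system."""
    return 0.5 * MASS * (v1 * v1 + v2 * v2) + lj_energy(x2 - x1)


def simulate(x1: float, x2: float, v1: float, v2: float,
             dt: float, steps: int) -> Tuple[float, float, float, float]:
    """Advance two atoms on a line (x1 < x2) with the velocity Verlet integrator."""
    f = lj_force(x2 - x1)
    a1, a2 = -f / MASS, f / MASS  # equal and opposite accelerations
    for _ in range(steps):
        # 1. Move each atom using its current velocity and acceleration.
        x1 += v1 * dt + 0.5 * a1 * dt * dt
        x2 += v2 * dt + 0.5 * a2 * dt * dt
        # 2. Recompute the force at the new separation.
        f = lj_force(x2 - x1)
        new_a1, new_a2 = -f / MASS, f / MASS
        # 3. Update velocities with the average of old and new accelerations.
        v1 += 0.5 * (a1 + new_a1) * dt
        v2 += 0.5 * (a2 + new_a2) * dt
        a1, a2 = new_a1, new_a2
    return x1, x2, v1, v2


if __name__ == "__main__":
    start = (0.0, 1.5, 0.0, 0.0)  # atoms 1.5 sigma apart, initially at rest
    end = simulate(*start, dt=0.001, steps=5000)
    print("separation:", end[1] - end[0])
    print("energy drift:", total_energy(*end) - total_energy(*start))
```

Two quick sanity tests for an integrator like this one: the total energy should stay nearly constant, and the two atoms' momenta should remain equal and opposite.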


The Interdisciplinary Center for the Study of Biocomplexity

Composed of researchers from throughout the University, the Interdisciplinary Center for the Study of Biocomplexity focuses on the unique, yet complex, structures and behaviors of biological entities -- such as molecules, cells, or organisms -- and the variety of spatial and temporal relationships that arise from the interactions among such entities.

DEPARTMENTS

Aerospace and Mechanical Engineering
Glen L. Niebur, assistant professor

Biological Sciences
Crislyn D’Souza-Schorey, assistant professor
Edward H. Hinchcliffe, assistant professor
Kevin T. Vaughan, assistant professor

Computer Science and Engineering
Danny Chen, Rooney associate professor
Jesus A. Izaguirre, assistant professor
Gregory R. Madey, associate professor

Chemical Engineering
Andre F. Palmer, assistant professor

Chemistry and Biochemistry
Brian M. Baker, assistant professor
Holly V. Goodson, assistant professor

Mathematics
Mark S. Alber, professor
Bei Hu, professor

Physics
Albert-László Barabási, Emil T. Hofman professor
James A. Glazier, associate professor
Gerald L. Jones, professor

FACILITIES

Laboratory for Computational Life Sciences

http://www.nd.edu/~icsb

The Trade-offs in Natural and Artificial Life

Just as with biological beings, there are trade-offs in artificial life systems. “If you want a universal, very adaptive agent,” says Matthias J. Scheutz, assistant professor of computer science and engineering, “then you need a sophisticated control system that can manage the complexity of the agent, one that is able to adapt and adjust. It would require greater computational power and, hence, more physical resources. The physical implementation, for example, would consume more energy and might be heavier, which, in turn, would require a more robust body, causing it to move slower. It is very much a cause-and-effect relationship.”

In his research, Scheutz models control systems of artificial agents in artificial environments in order to develop an understanding of the possible evolutionary trajectories of such systems. Using an artificial life simulator to model agent behavior, Scheutz and his students hope to answer biological questions regarding the evolution of agent control systems in nature, in particular “affective control” -- behavior initiated, modulated, or interrupted by primitive emotional states like fear or anger. Why, for example, are there hundreds of thousands of simple species in nature, such as insects, with low-level affective control systems, and only a few species that have higher reasoning capacities? “We want to understand the circumstances in which those higher creatures evolved,” says Scheutz.

The work will also assist in the development of artificial agents in the real world: robots. “We are trying to assess the situations in which it is advantageous for a robot to have more sophisticated skills,” explains Scheutz. “How much more does it cost -- in terms of time, energy, and money -- for a robot to be able to plan ahead, think more, reason instead of simply performing a rote function?” According to Scheutz, once the trade-offs have been worked out, it may be possible, depending on the task the robot has to perform, to use a less powerful, less sophisticated control system. An autonomous robot used for mowing the lawn, for instance, could be equipped with a simple biologically motivated control system that would guarantee good coverage of the lawn area while being very inexpensive in terms of initial cost and overall energy consumption.

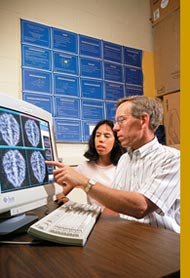

Sauer’s tomographic research, supported by the Indiana 21st Century Research and Technology Fund and the General Electric Corporation (GE) and conducted in collaboration with researchers from Indiana University and Purdue University, focuses on two types of imaging: emission and transmission. In transmission imaging, such as an X-ray or CT scan, the image is constructed from the amount of radiation that passes through the patient. In emission imaging, such as positron emission tomography (PET), the patient either inhales or is injected with a radioactive isotope whose subsequent emissions can be measured by medical personnel using the scanning equipment. For example, some tumors absorb certain types of glucose at higher rates than healthy tissue. When this glucose is tagged with radioactive tracers, the signals sent back during the scan can help identify the location and size of the tumor, as well as how active it is.
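The article does not describe Sauer’s reconstruction methods. As a hedged illustration of the transmission case only, the sketch below applies the Beer-Lambert law, I = I0·exp(-Σ μ·Δx), to a tiny made-up attenuation map. Each ray's transmitted intensity is measured, and taking -ln(I/I0) recovers the line integral (projection) of attenuation along that ray. The following are all assumptions: parallel rays along pixel rows and columns, a single-energy beam, the attenuation values, and the function names. Real CT reconstruction combines projections from many angles, for example by filtered back-projection, which this sketch does not attempt.

```python
import math
from typing import List

# Hypothetical 3x3 attenuation map (coefficients per cm); illustrative values only.
MU_MAP: List[List[float]] = [
    [0.0, 0.2, 0.0],
    [0.2, 0.5, 0.2],
    [0.0, 0.0, 0.3],
]
DX_CM = 1.0   # assumed pixel width along each ray
I0 = 1000.0   # assumed incident intensity (arbitrary units)


def transmitted_intensity(i0: float, mu_along_ray: List[float], dx: float) -> float:
    """Beer-Lambert law for one straight ray: I = I0 * exp(-sum(mu * dx))."""
    return i0 * math.exp(-sum(mu * dx for mu in mu_along_ray))


def projection(i0: float, i: float) -> float:
    """Recover the line integral of attenuation from a transmission measurement."""
    return -math.log(i / i0)


def measure_view(mu_map: List[List[float]], axis: int) -> List[float]:
    """Simulate one parallel-beam view: axis 0 sends rays along rows, axis 1 along columns."""
    rays = mu_map if axis == 0 else [list(col) for col in zip(*mu_map)]
    return [transmitted_intensity(I0, ray, DX_CM) for ray in rays]


if __name__ == "__main__":
    for axis in (0, 1):
        intensities = measure_view(MU_MAP, axis)
        projections = [projection(I0, i) for i in intensities]
        print(f"view {axis}: I = {[round(i, 1) for i in intensities]}, "
              f"projections = {[round(p, 3) for p in projections]}")
```

The two views give different projections of the same map. Combining many such views taken from different directions is what allows a tomographic image to be reconstructed.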
