ND LR Printer

TraceLab Components for Generating Speech Act Types in Developer Question/Answer Conversations

Rrezarta Krasniqi, Collin McMillan

ND LR Printer Component

The ND LR Printer Component receives as input the string produced by the ND LR File Parser Component and writes its contents to TraceLab's log space and TraceLab's workspace. This component does not require any input from the user.

The following items explain the ND LR Printer component:

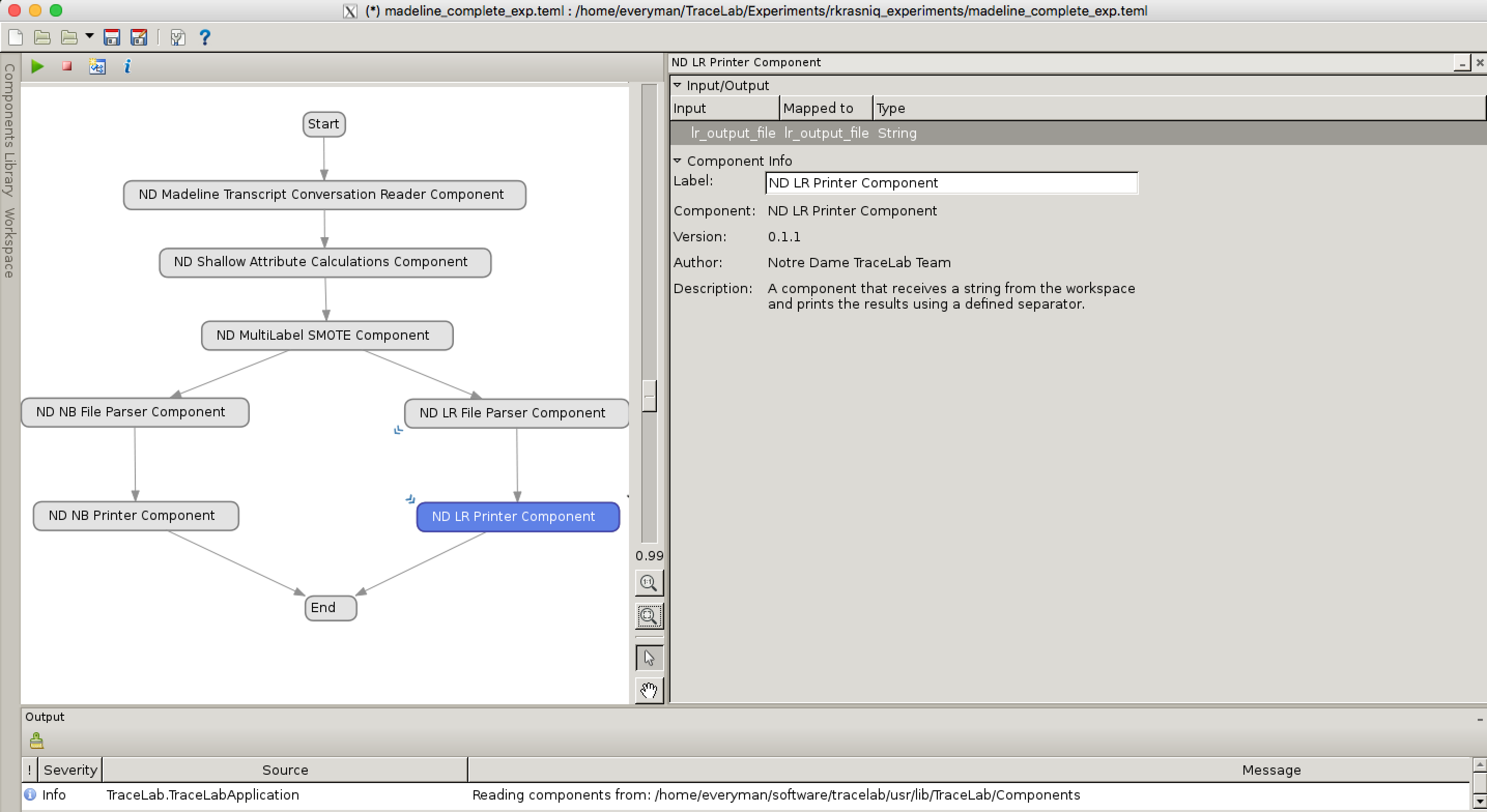

1. Clicking the 'i' button of the component opens the box on the far right. This box contains all the details of the component.

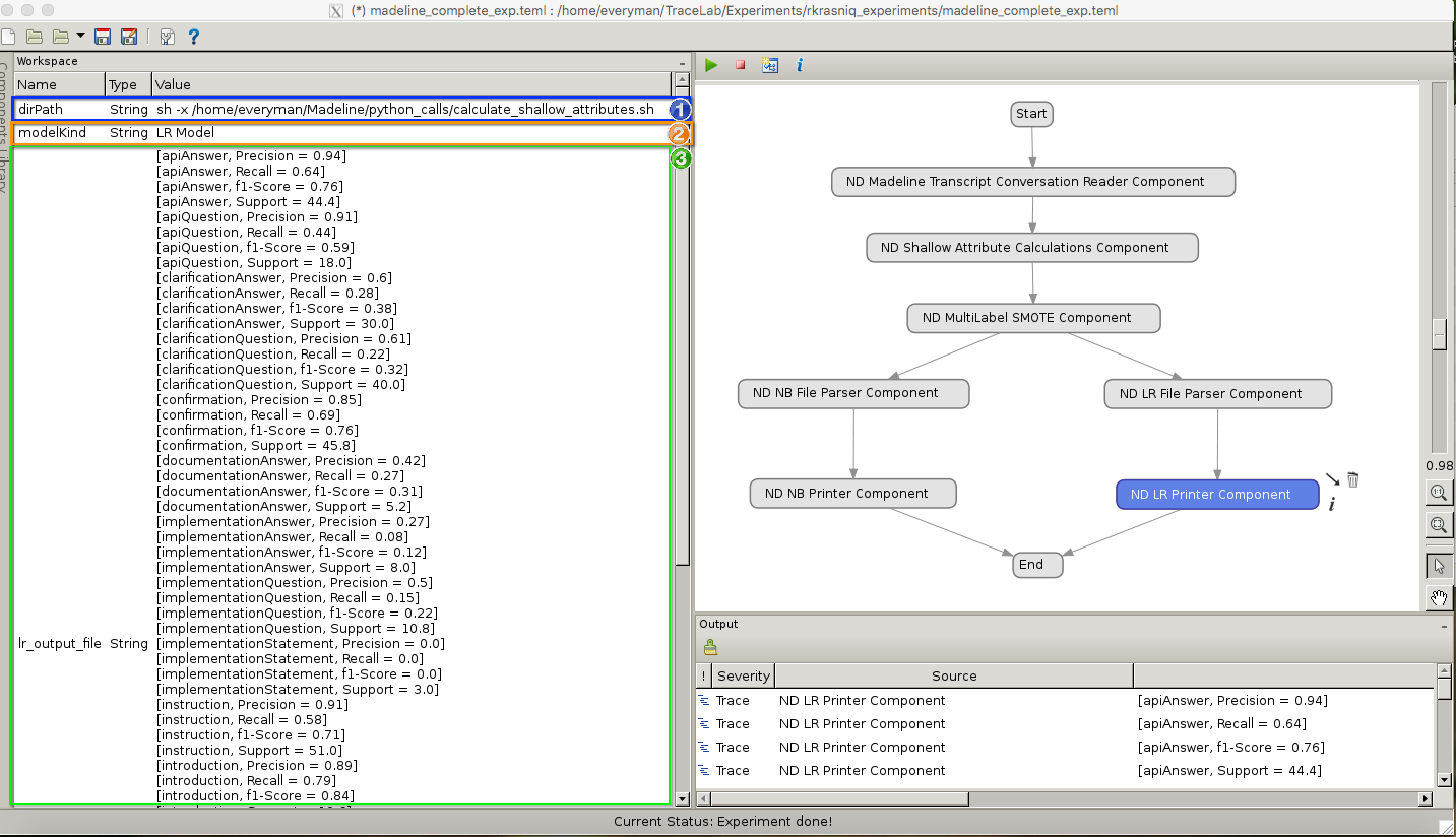

2. The following image shows the information regarding the input of the component. The variable "lr_output_file" is populated from the contents of TraceLab's workspace.

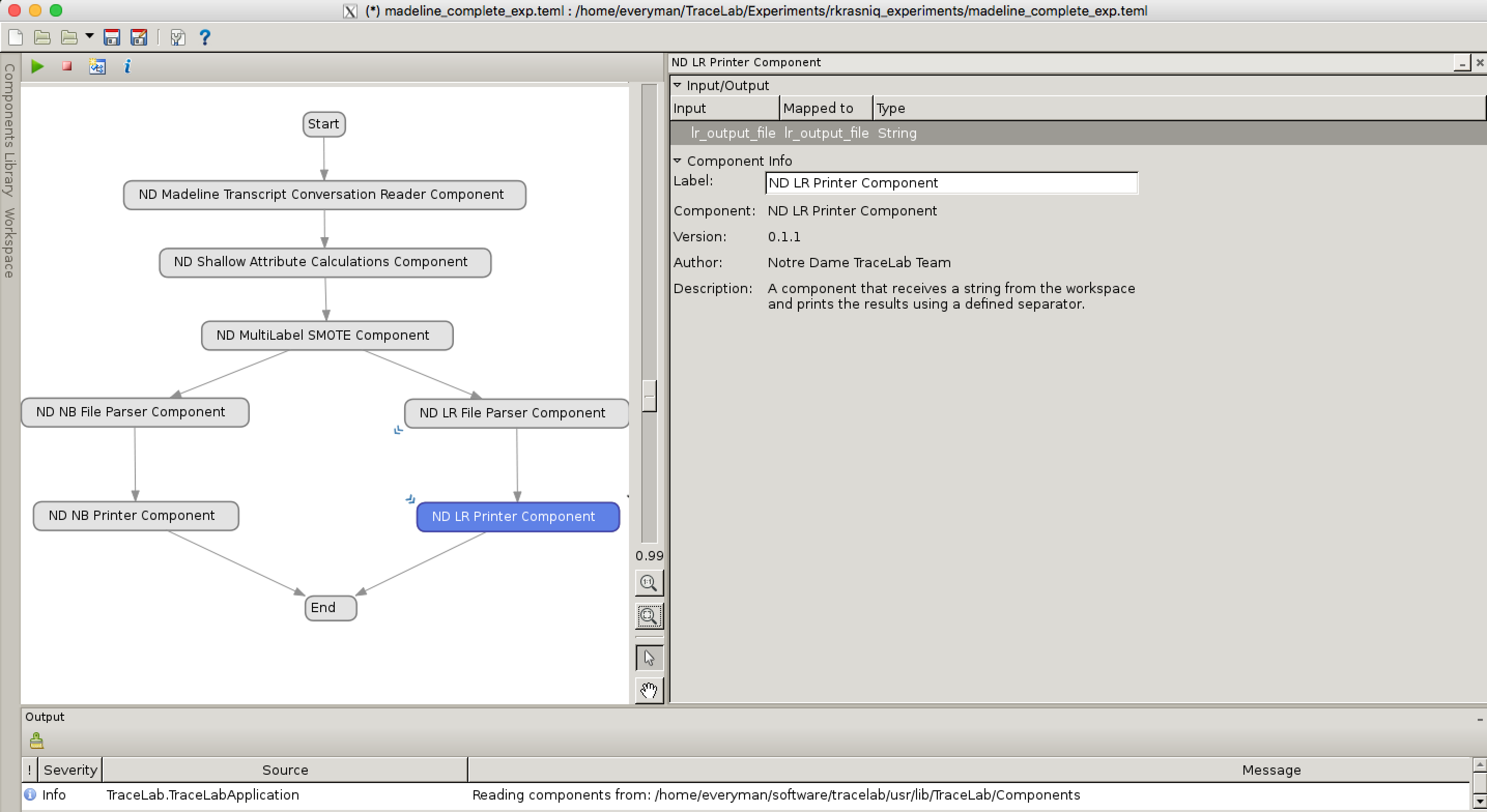

3. The following image shows the information regarding the label of the component.

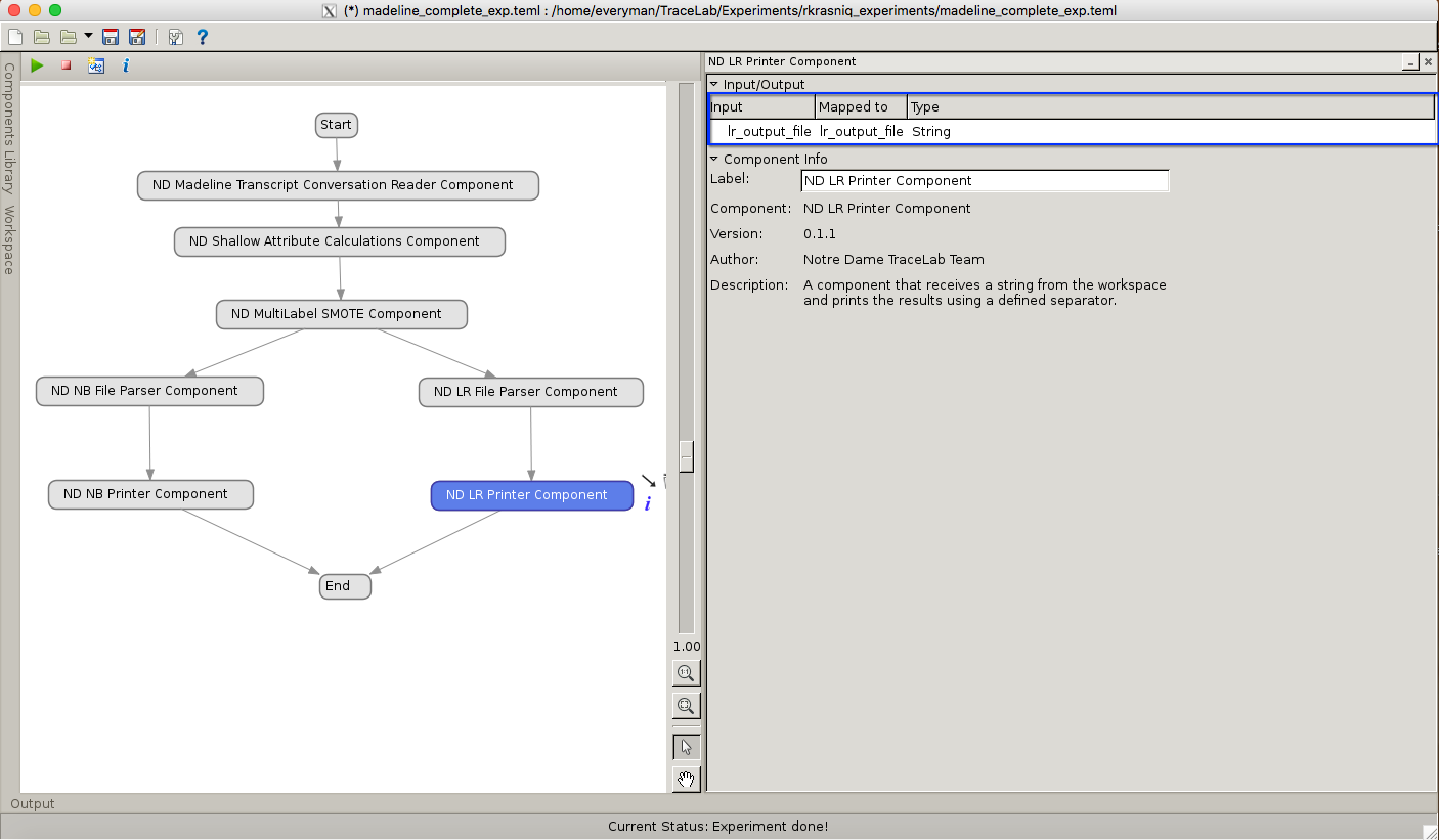

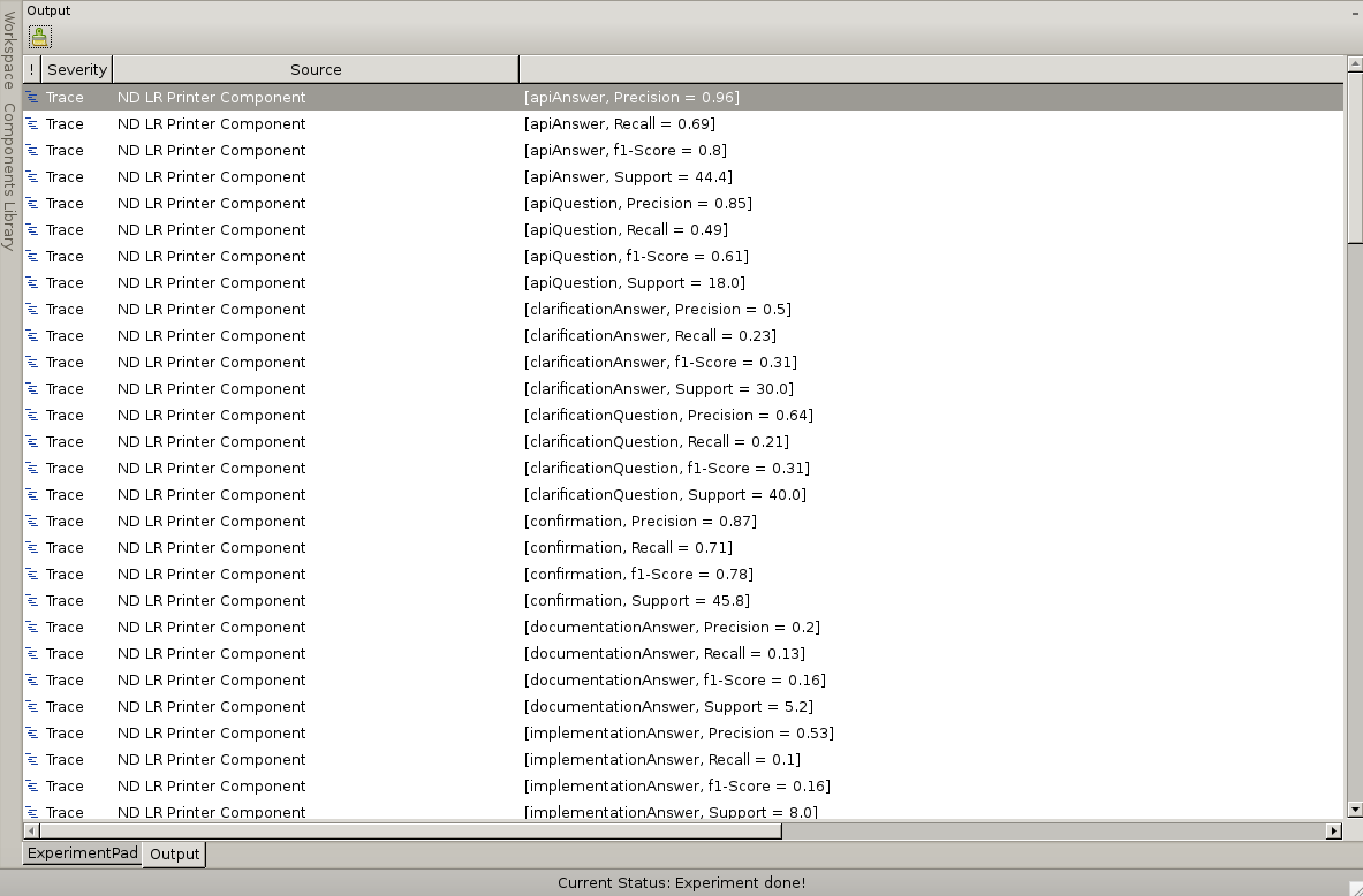

4. The following image shows the output of the tool printed to TraceLab's log space. Note that the output shows the generated speech acts of different types (e.g., apiAnswer, clarificationQuestion, confirmation, etc.) and their corresponding performance metrics (i.e., precision, recall, F1-score, and support).

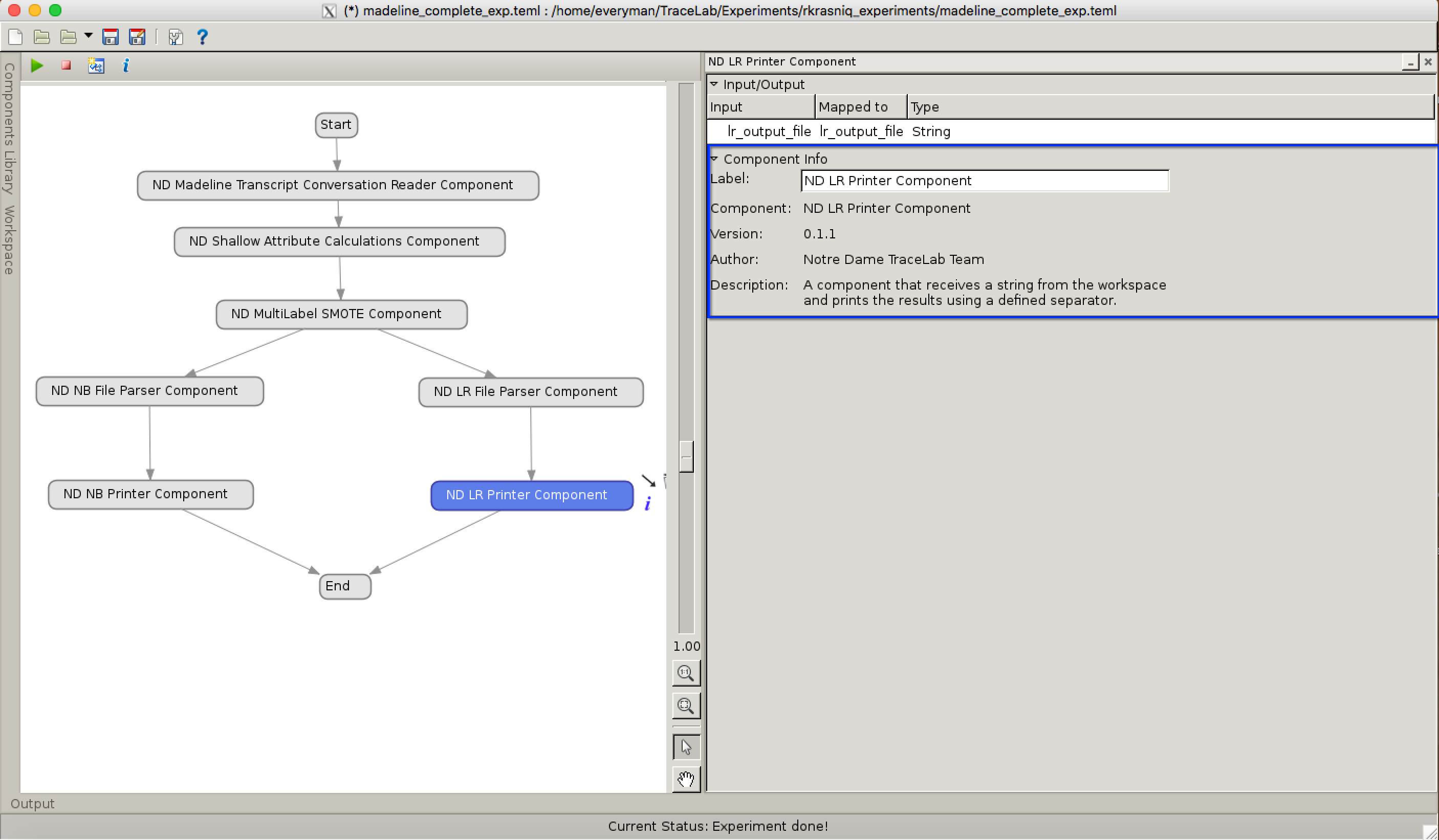

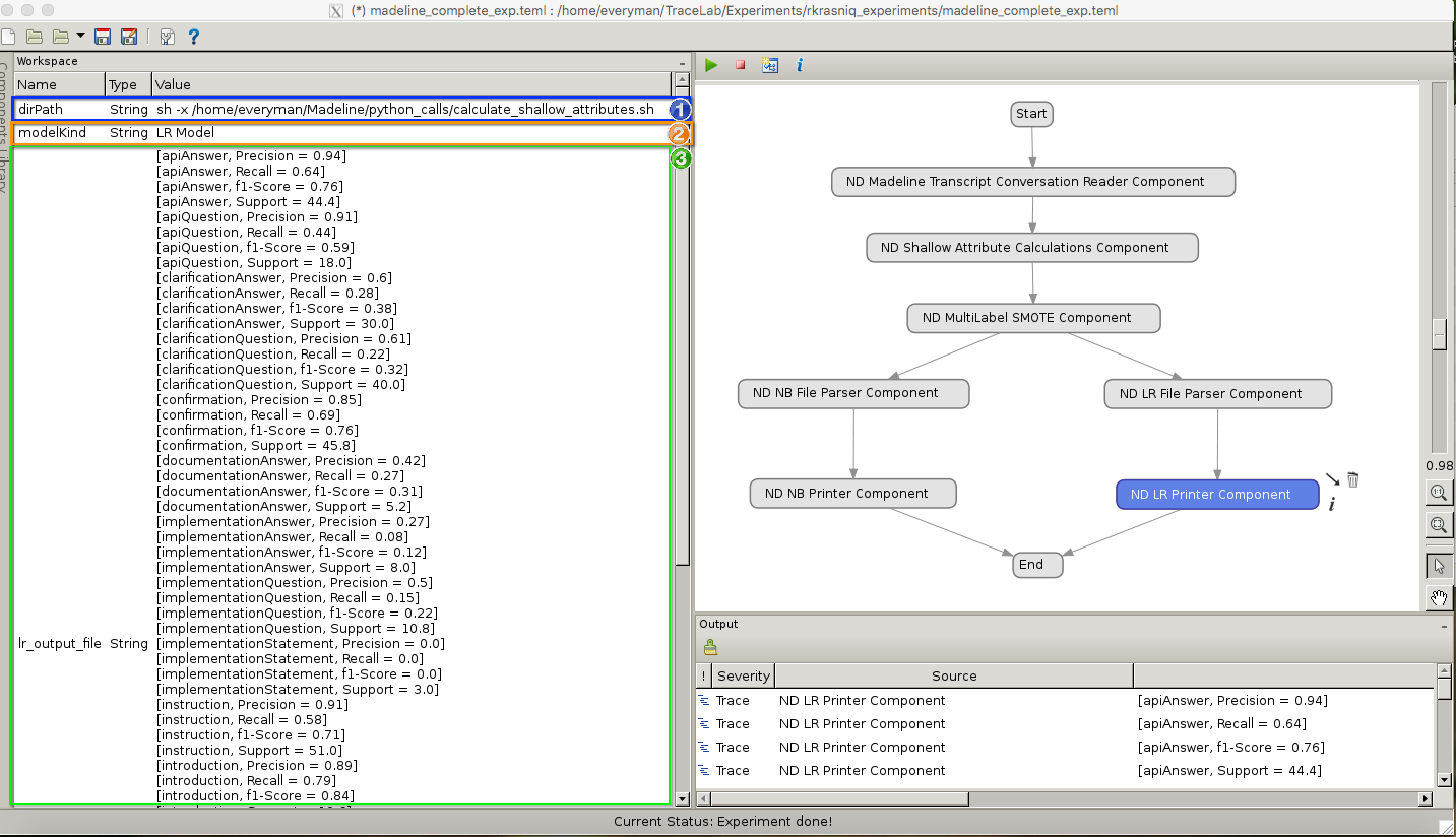

5. The following image shows the output of the tool printed to TraceLab's workspace. The values stored in the workspace are: (1) the directory path of the Python scripts that calculate shallow attributes; (2) the prediction model selected by the user to run the experiment; and (3) the results showing the performance metrics calculated for each speech act type.

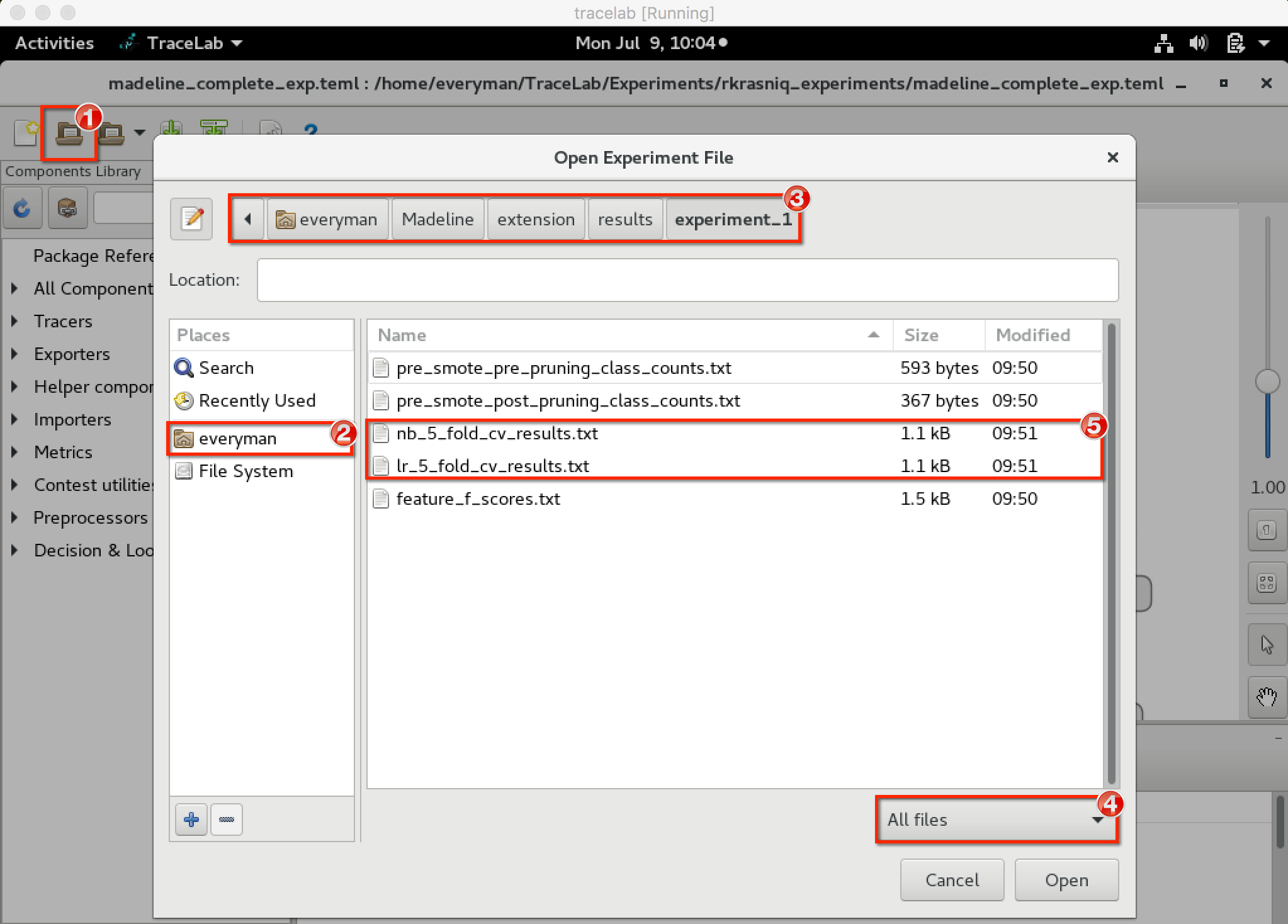

6. The same results can also be found in the directory:

/home/everyman/Madeline/extension/results/experiment_1.

To locate the output files, follow the steps labeled (1) -- (5):

- Open the icon labeled (1)

- Open the everyman folder -- label (2)

- Follow the directory path -- label (3)

- Change the default "Experiment files (.teml)" to "All files" -- label (4)

- Open the output file:

- lr_5_fold_cv_results.txt -- label (5)
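For readers who prefer a script to the file browser, the steps above can be sketched in Python. This is a minimal sketch, not part of the TraceLab components: the helper name `read_results` is hypothetical, and the only things taken from this page are the directory and the file name.

```python
from pathlib import Path

# Directory and file name as given on this page.
RESULTS_DIR = Path("/home/everyman/Madeline/extension/results/experiment_1")
RESULTS_FILE = "lr_5_fold_cv_results.txt"


def read_results(results_dir=RESULTS_DIR, file_name=RESULTS_FILE):
    """Return the text of the results file, or None if it does not exist.

    Hypothetical helper for illustration; it only reads the file and
    makes no assumption about the layout of its contents.
    """
    path = Path(results_dir) / file_name
    if not path.is_file():
        return None
    return path.read_text(encoding="utf-8")


if __name__ == "__main__":
    report = read_results()
    print(report if report is not None else "Results file not found.")
```

Returning `None` instead of raising keeps the sketch usable on machines where the experiment has not been run yet.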

Details about Data

The data used to generate the speech acts are:

- Skype transcripts (found at: /home/everyman/Madeline/data/SkypeTranscripts). The Skype transcripts are dialogues between a virtual assistant and a programmer. The programmer's role was to ask the "virtual assistant" questions during bug repair. The questions were of different types, such as implementation questions or clarification questions. The "virtual assistant's" role was to provide hints that guide the programmer in repairing the bug.

- codes.csv -- the file that contains the listing of all participants along with annotation labels (e.g., clarificationQuestion, implementationQuestion, etc.).

- participant1.txt ... participant30.txt -- the files that contain the conversations collected between each participant and the virtual assistant.

- results.csv -- the file that contains each line of text (i.e., speech act) that needs to be classified and its corresponding expected annotation label.
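As an illustration of how a file like results.csv could be inspected, the sketch below reads text/label pairs and counts the labels. The column layout is an assumption (text in the first column, annotation label in the second); this page does not specify it, so check the actual file before relying on it. The function names are hypothetical, and the sample rows are made up for the demonstration.

```python
import csv
import io
from collections import Counter


def load_labelled_lines(csv_file):
    """Read (text, label) pairs from an open CSV file object.

    Assumption: column 0 holds the line of text and column 1 its
    annotation label. Rows with fewer than two columns are skipped.
    """
    pairs = []
    for row in csv.reader(csv_file):
        if len(row) < 2:
            continue  # blank or malformed row
        pairs.append((row[0].strip(), row[1].strip()))
    return pairs


def label_counts(pairs):
    """Count how many lines carry each annotation label."""
    return Counter(label for _, label in pairs)


if __name__ == "__main__":
    # Made-up rows for illustration only; not taken from the data set.
    sample = io.StringIO(
        "Where is the method defined?,implementationQuestion\n"
        "Do you mean the first test?,clarificationQuestion\n"
    )
    print(label_counts(load_labelled_lines(sample)))
```

To use it on the real file, open it with `open(path, newline="", encoding="utf-8")` and pass the file object to `load_labelled_lines`.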

Generated data are:

- Performance metrics calculated for each speech act type (found at:

/home/everyman/Madeline/extension/results/).

- nb_5_fold_cv_results.txt

- lr_5_fold_cv_results.txt

- Predictions, where each speech act is the text to be classified and its annotation label is computed indirectly through a Python call made via the "ND Shallow Attribute Calculations Component".

- shallow_attribute_calculations.py (found at: /home/everyman/Madeline/extension)

- shallow_attribute_calculations.py invokes:

- experiment_handles.create_cv_experiment_function(experiment_classifiers)

from:

- function_handles.py (found at: /home/everyman/Madeline/experiment_handles/).

Finally, the resulting list of classifiers is tested through 5-fold cross-validation.
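To make the evaluation procedure concrete, here is a minimal, dependency-free sketch of 5-fold cross-validation with per-label precision, recall, F1-score, and support. It is not the code in function_handles.py: all function names are hypothetical, a majority-label placeholder stands in for the real classifiers, and pooling the predictions of all folds before computing the metrics is an assumption about how the results are aggregated.

```python
from collections import Counter


def k_fold_indices(n_items, k=5):
    """Split range(n_items) into k contiguous (train, test) index lists."""
    sizes = [n_items // k + (1 if i < n_items % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_items))
        folds.append((train, test))
        start += size
    return folds


def per_label_metrics(gold, predicted):
    """Return {label: (precision, recall, f1, support)}."""
    metrics = {}
    for label in sorted(set(gold) | set(predicted)):
        pairs = list(zip(gold, predicted))
        tp = sum(1 for g, p in pairs if g == label and p == label)
        fp = sum(1 for g, p in pairs if g != label and p == label)
        fn = sum(1 for g, p in pairs if g == label and p != label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        # Support is the number of gold items with this label.
        metrics[label] = (precision, recall, f1, tp + fn)
    return metrics


def majority_label(labels):
    """Placeholder 'classifier': predict the most common training label."""
    return Counter(labels).most_common(1)[0][0]


def cross_validate(labels, k=5):
    """Run k-fold CV with the placeholder classifier; pool all folds."""
    gold, predicted = [], []
    for train, test in k_fold_indices(len(labels), k):
        guess = majority_label([labels[i] for i in train])
        gold.extend(labels[i] for i in test)
        predicted.extend(guess for _ in test)
    return per_label_metrics(gold, predicted)
```

In a real run, `majority_label` would be replaced by training a classifier on the training fold and predicting each item in the test fold; the splitting and metric computation stay the same.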

Further details about data interpretation are provided in the FSE'18 paper. Please see Section 9 (pages 9 and 10), specifically subsections 9.1, 9.2, and 9.3.
