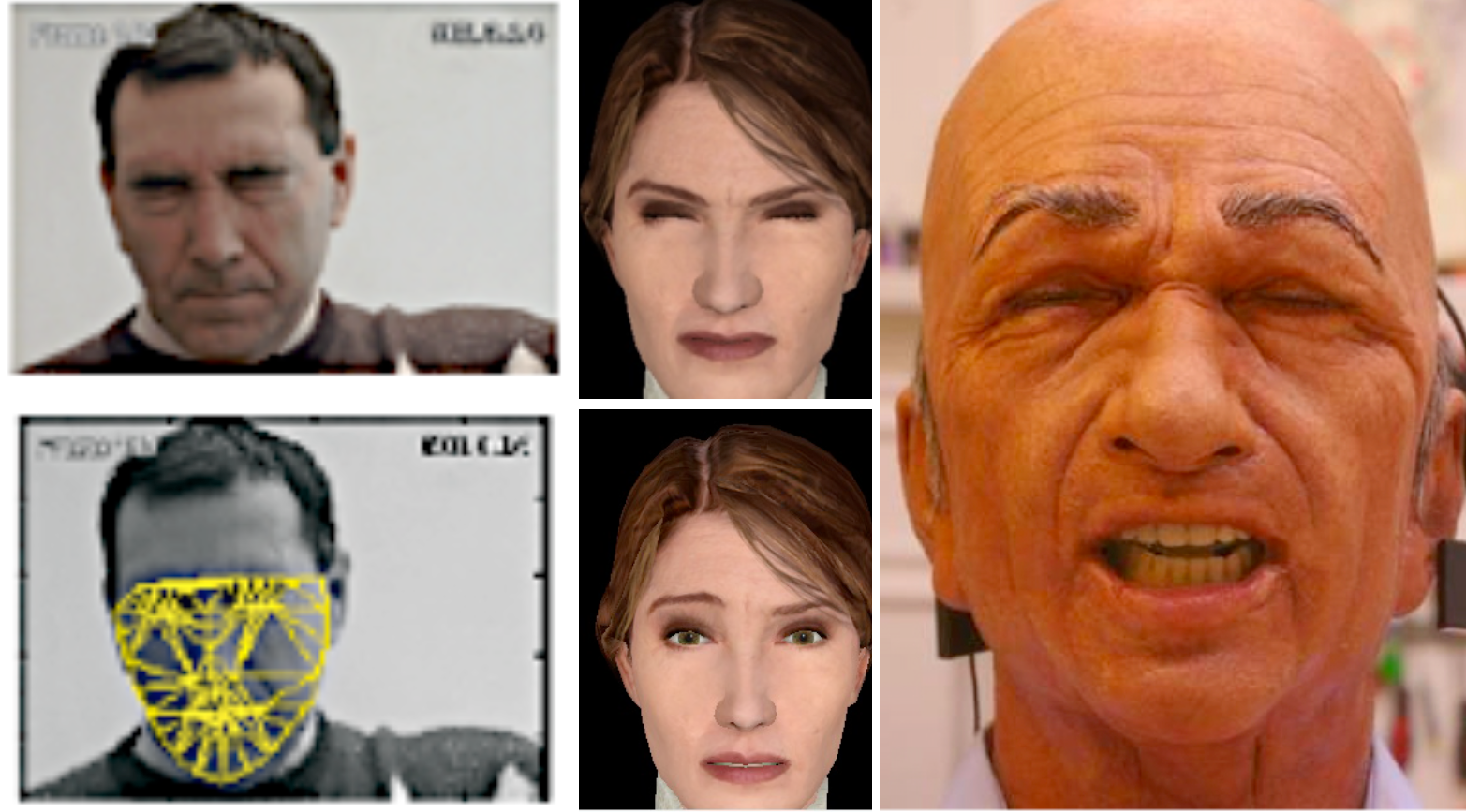

Next-Generation Patient Simulators

Over 400,000 people die every year in the US due to preventable medical errors. One way to prevent these errors is to have clinicians practice on robotic patients before treating real ones, and hundreds of thousands of doctors, nurses, EMTs, and firefighters train on these human patient simulator (HPS) systems. Although these simulators are perhaps the most commonly used android robots in America, a critical technology gap remains: none of the commercially available HPS systems exhibit realistic facial expressions, gaze, or mouth movements, despite the vital importance of these cues in how providers assess and treat patients. We are investigating the use of a highly expressive android robot capable of conveying patient pathologies to augment this training.

Computationally Modeling Joint Action

In order to realize the vision of socially intelligent machines, we must understand the actions and interactions of people in groups. We are computationally modeling both human-human and human-robot dyadic and group interaction on dimensions such as rapport, synchrony, and mimicry, across naturalistic and laboratory contexts.

Social Context Learning

For robots to work alongside humans, they must be able to understand social context, including situational context, social norms, social roles, and cultural conventions. We are exploring novel methods that use high-level context to learn an appropriateness function to inform robot actions.

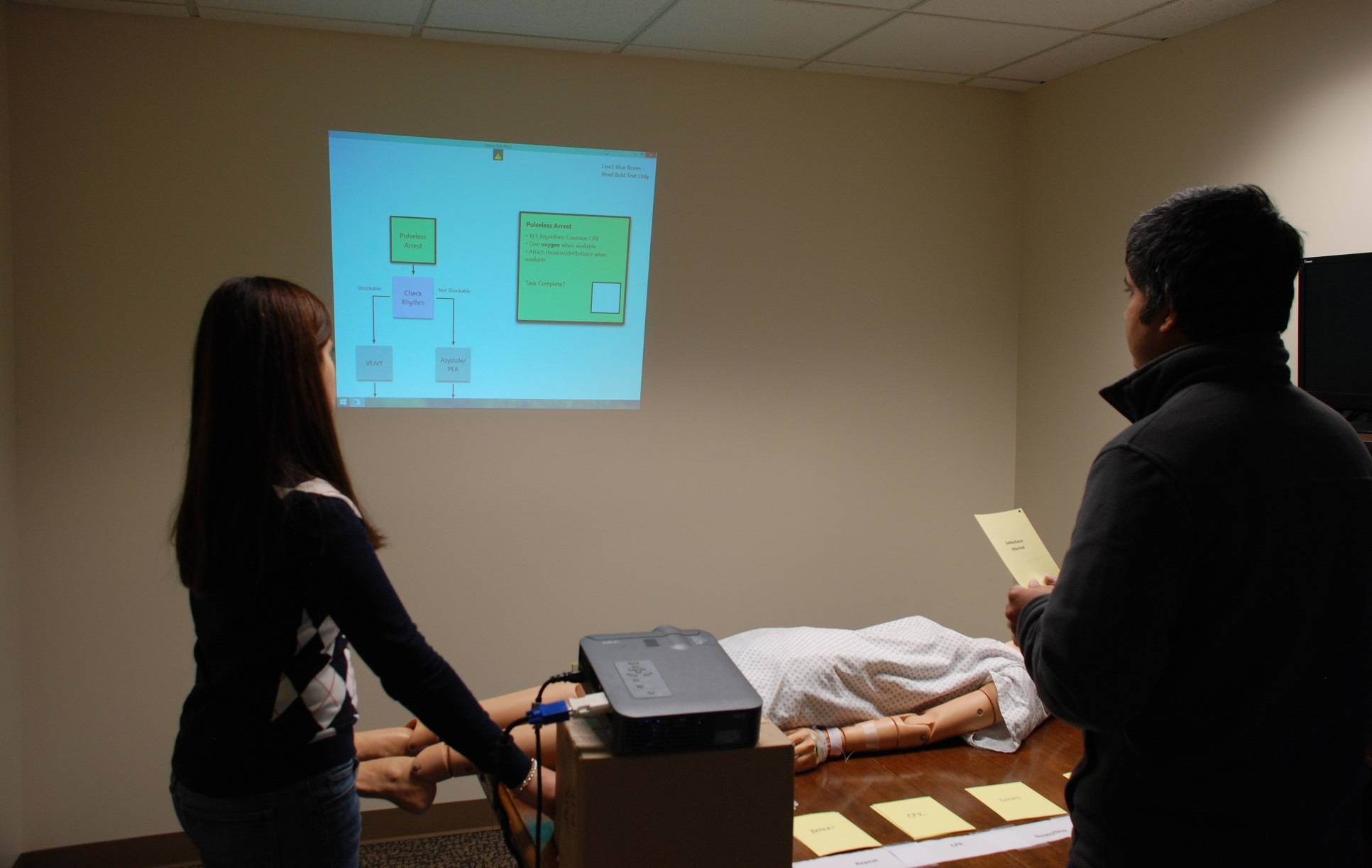

Pervasive Systems in Healthcare

Up to 400,000 people may die each year as a result of preventable medical errors, many resulting from miscommunication and low situational awareness among providers. We are exploring the use of interactive shared displays to facilitate situational awareness in collaborative tasks and procedures in medical settings.

Implicit Learning for Human-Robot Interaction

Learning from demonstration is an optimal way for people to teach robots. However, robots will not always behave correctly, which may cause user frustration. Our work explores novel ways for a robot to understand and respond to implicit teacher cues (such as frustration) in real time to appropriately mitigate errors.

- © Robotics, Health, and Communication Lab
