Computationally Modeling Joint Action

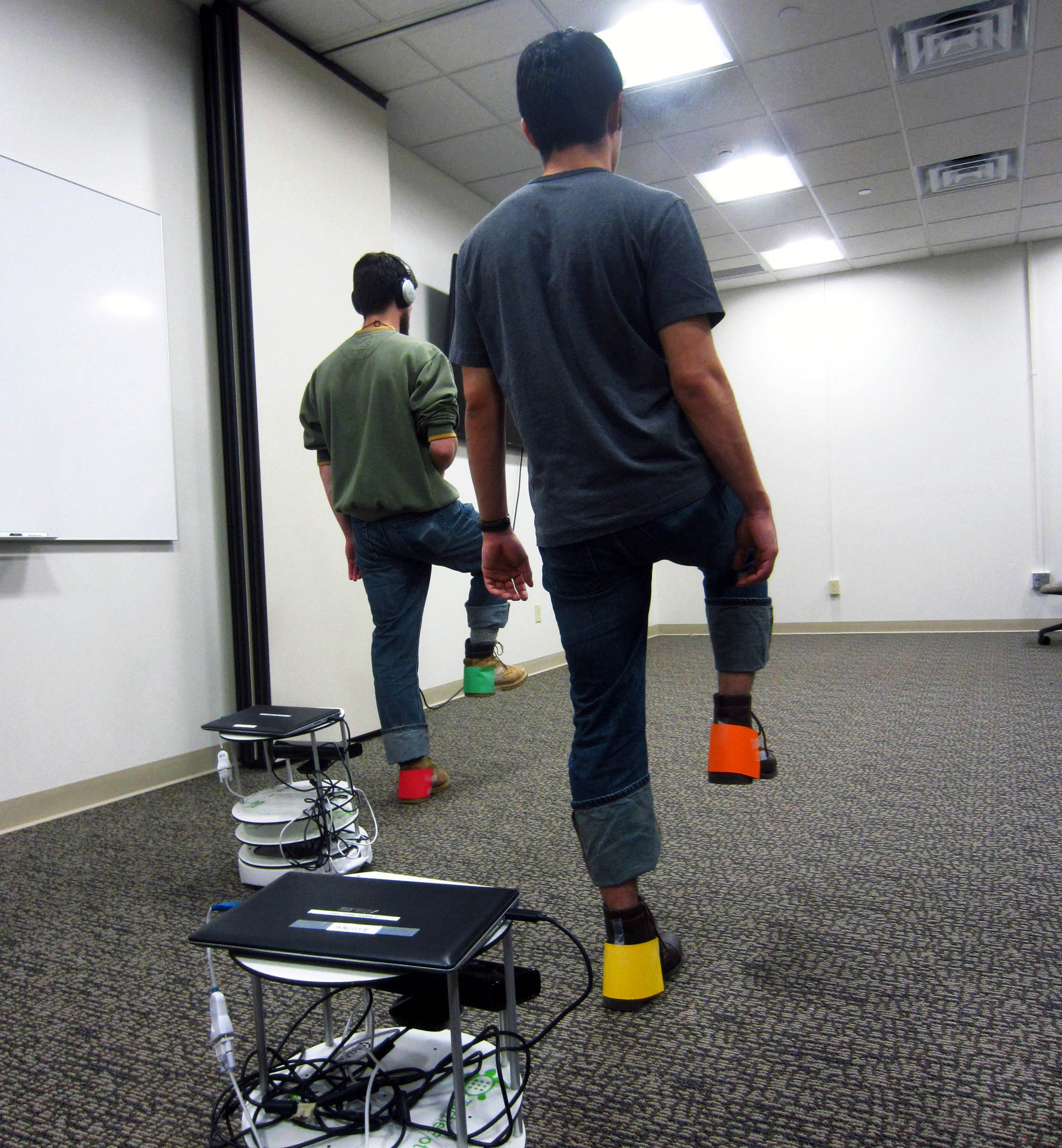

As robots become more commonplace in human social environments, it is important that they be able to understand the activities around them and learn to work well with human collaborators. We are developing ways to model joint action: coordinated behavior between two or more people.

We computationally model both human-human and human-robot group interaction across multiple naturalistic contexts, including psychomotor synchrony, clinical teamwork, and face-to-face interaction. Several of our current projects aim to automatically identify, and inform, synchronous actions in stationary, mobile, and real-time systems.
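This page does not describe how synchronous actions are detected. As a hedged illustration only, the sketch below scores synchrony between two people's event time series using the event synchronization measure of Quian Quiroga, Kreuz, and Grassberger. It is not necessarily the method used in the publications listed below. It assumes that events (for example footsteps or hand movements) have already been extracted as timestamps in seconds, and it uses a fixed coincidence window `tau` rather than the adaptive window of the original measure. The function names `event_synchrony` and `_count` are hypothetical.

```python
from math import sqrt
from typing import Sequence, Tuple


def _count(x: Sequence[float], y: Sequence[float], tau: float) -> float:
    """c(x|y): how often an event in x follows an event in y within tau seconds.

    Exact coincidences count as 1/2 so that they are shared evenly
    between c(x|y) and c(y|x).
    """
    total = 0.0
    for tx in x:
        for ty in y:
            lag = tx - ty
            if 0 < lag <= tau:
                total += 1.0
            elif lag == 0:
                total += 0.5
    return total


def event_synchrony(
    x: Sequence[float], y: Sequence[float], tau: float
) -> Tuple[float, float]:
    """Return (Q, q) for two event-time series (hypothetical helper).

    Q: synchrony strength, 1.0 when every event has a partner within tau.
    q: direction in [-1, 1], positive when events in x tend to lead y.
    Assumes a fixed window tau (the original measure adapts it locally).
    """
    if not x or not y:
        return 0.0, 0.0
    c_xy = _count(x, y, tau)  # x events shortly after y events
    c_yx = _count(y, x, tau)  # y events shortly after x events
    norm = sqrt(len(x) * len(y))
    return (c_xy + c_yx) / norm, (c_yx - c_xy) / norm
```

For example, if one person's steps fall at 0.0, 1.0, 2.0, 3.0 s and a partner's at 0.1, 1.1, 2.1, 3.1 s, `event_synchrony` with `tau=0.2` gives Q = 1.0 and q = 1.0: fully synchronized, with the first person leading. With a fixed `tau`, keep it below half the shortest inter-event interval in either series; otherwise one event can match several partners and Q can exceed 1.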

Recent Publications:

Iqbal, T. and Riek, L.D. "A Method for Automatic Detection of Psychomotor Entrainment". In IEEE Transactions on Affective Computing, doi: 10.1109/TAFFC.2015.2445335, 2015.

Iqbal, T., Gonzales, M.J., and Riek, L.D. "Joint Action Perception to Enable Fluent Human-Robot Teamwork". In Proceedings of the 24th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), 2015.

Rack, S., Iqbal, T., and Riek, L.D. "Enabling Synchronous Joint Action in Human-Robot Teams". In Proceedings of the 10th ACM/IEEE International Conference on Human-Robot Interaction (HRI), 2015.